The Curious Case of BNN

Rephrasing news articles from major outlets is much easier and cheaper than actually hiring journalists all over the world

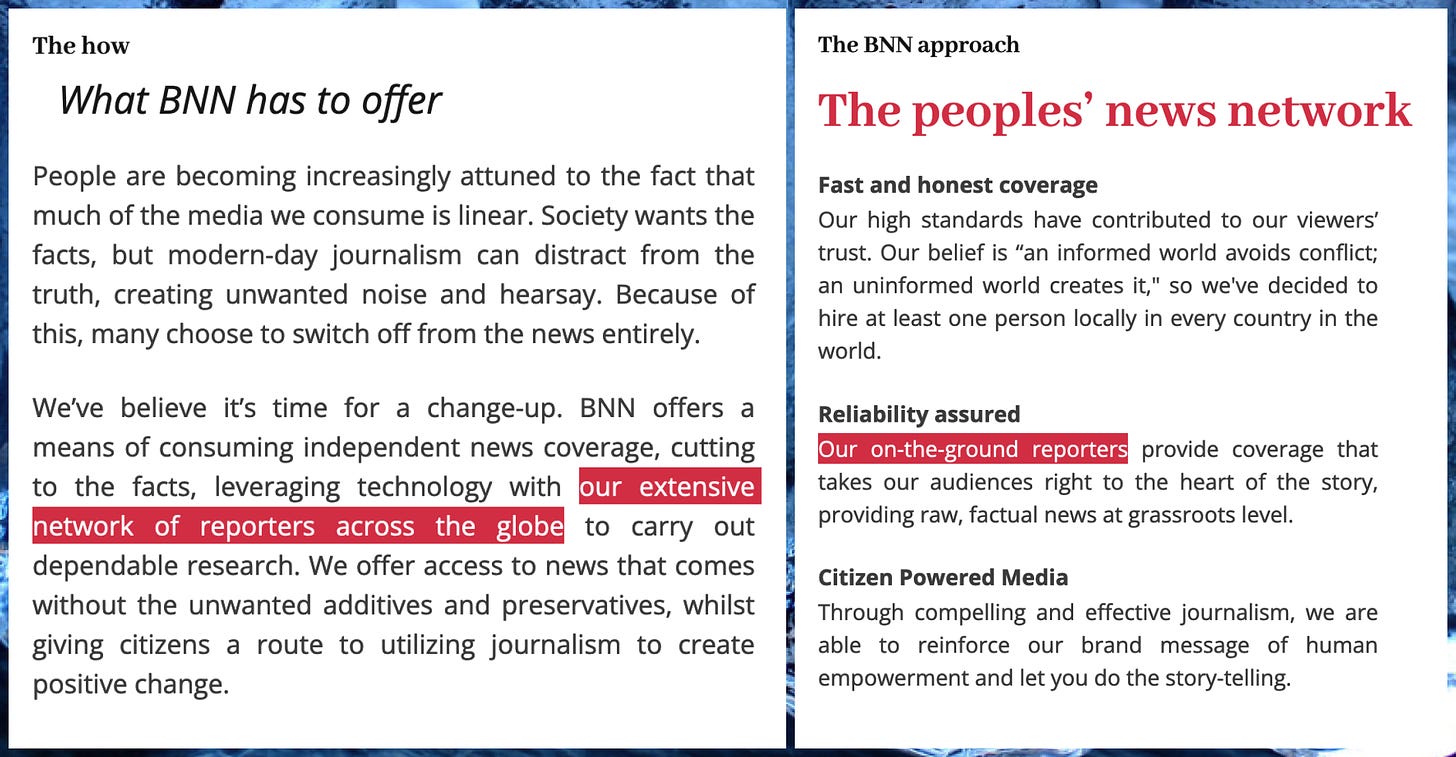

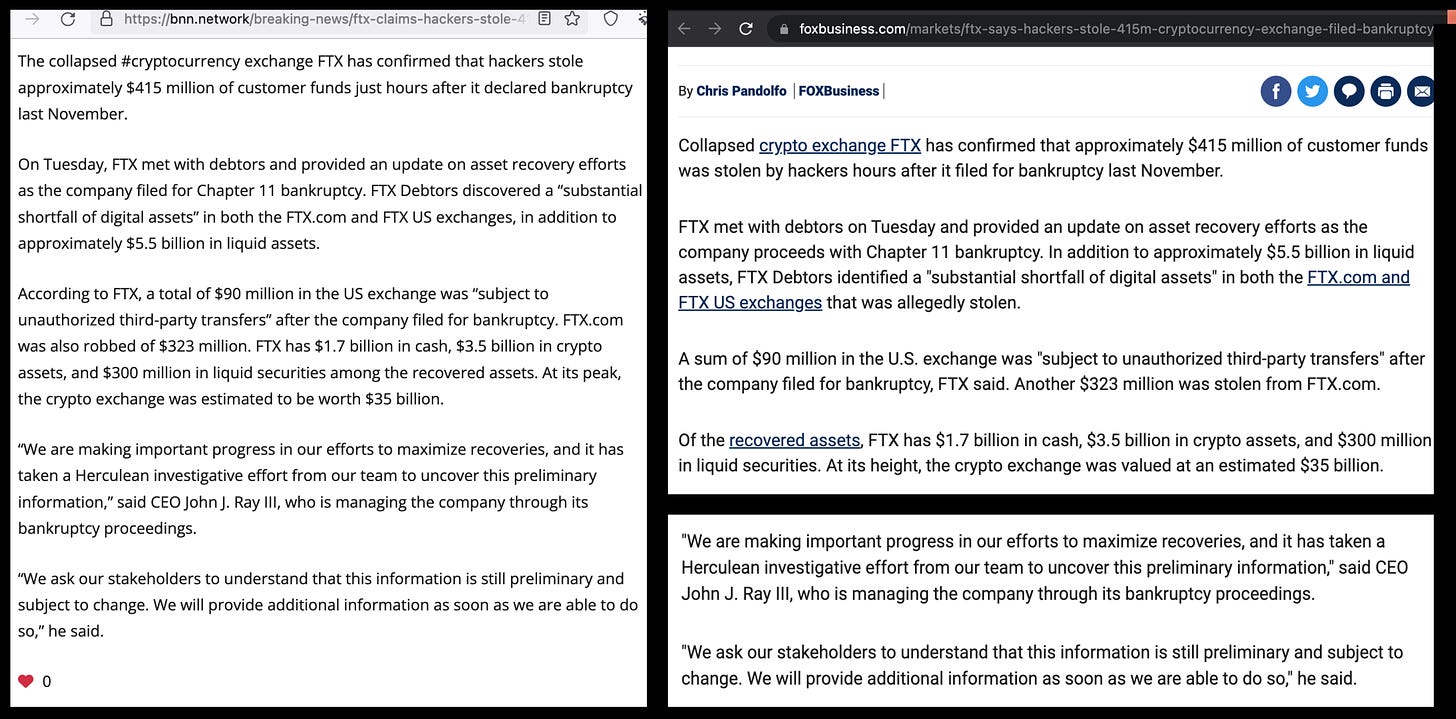

BNN (the "Breaking News Network", a news website operated by tech entrepreneur and convicted domestic abuser Gurbaksh Chahal) allegedly offers independent news coverage from an extensive worldwide network of on-the-ground reporters. As is often the case, things are not as they seem. A few minutes of perfunctory Googling reveals that much of BNN's "coverage" appears to be mildly reworded articles copied from mainstream news sites. For science, here’s a simple technique for algorithmically detecting this form of copying.

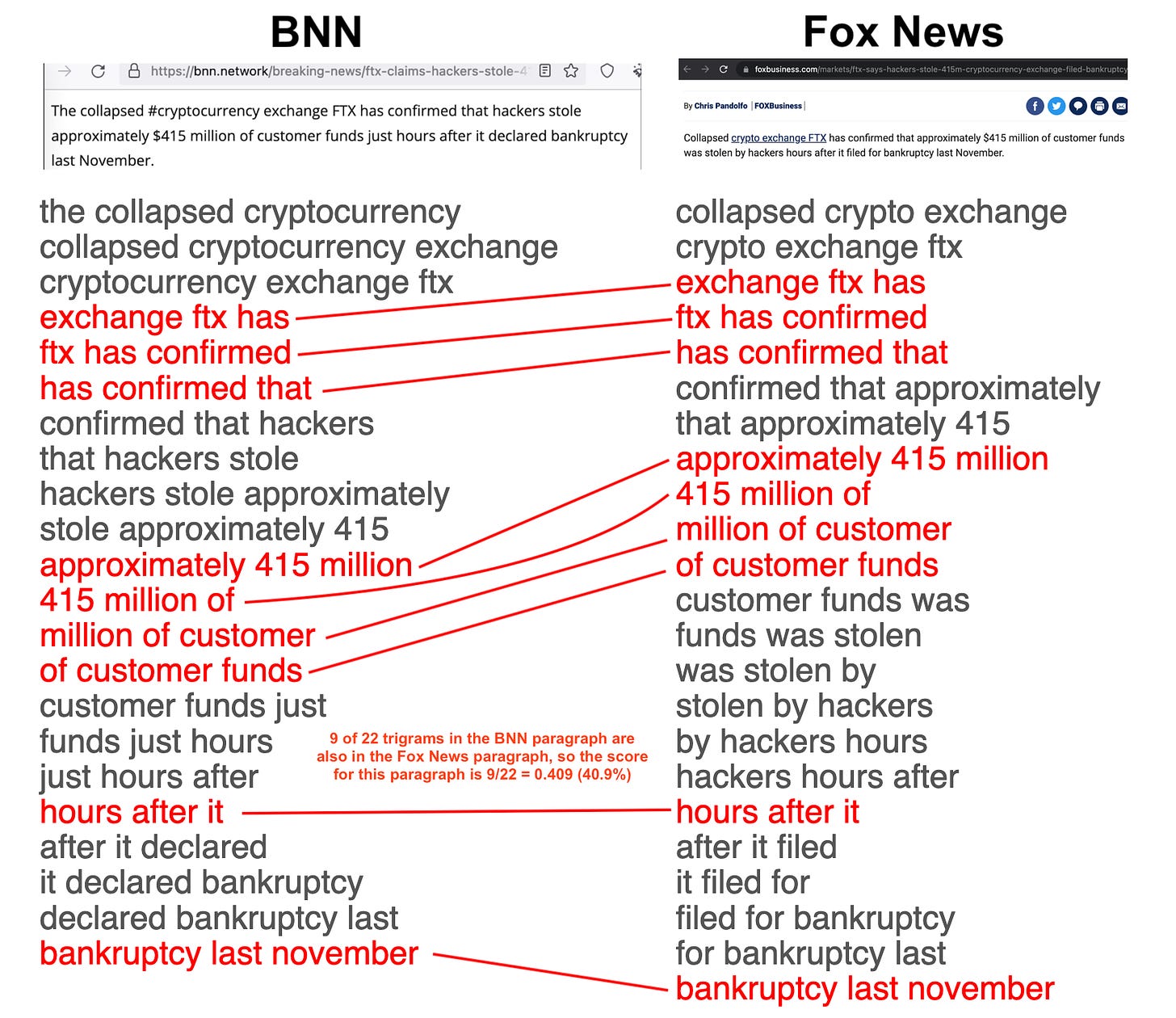

Many of the articles on the BNN website appear to have been created by copying and rewording individual paragraphs from articles published on major media sites. Much of the original language is preserved, however, and this can be detected in a variety of ways. One (extremely simple but reasonably effective) algorithm is as follows:

1. Split each article (both the BNN articles and the articles being compared to) into paragraphs, convert to lowercase, and eliminate all punctuation so that each paragraph becomes a list of consecutive words.

2. Convert each paragraph into the set of trigrams (sequences of three consecutive words) it contains. (N-grams of other lengths work as well.)

3. To compare two articles, compare the set of trigrams in every paragraph in the first article with the set of trigrams in every paragraph in the second, calculating the score for that pair of paragraphs as the percentage of trigrams in the first paragraph that are also present in the second. The pair of articles is considered a match if the average of the highest score for each paragraph in the first article is at least 25%, and no paragraph in the first article has a highest score of 0%.
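The steps above can be sketched on a toy example. This is a minimal illustration rather than the script used for the analysis: the function names (`words`, `trigrams`, `paragraph_score`, `is_match`) and the sample sentences are made up for this sketch, and it does not skip very short paragraphs.

```python
import re

def words(text):
    # Lowercase, split on runs of non-word characters, drop empty strings.
    return [w for w in re.split(r"\W+", text.lower()) if w]

def trigrams(text, n=3):
    # Set of all sequences of n consecutive words in the paragraph.
    w = words(text)
    return {" ".join(w[i:i + n]) for i in range(len(w) - n + 1)}

def paragraph_score(first, second):
    # Fraction of the first paragraph's trigrams that also occur in the second.
    a, b = trigrams(first), trigrams(second)
    return len(a & b) / len(a) if a else 0.0

def is_match(article1, article2, cutoff=0.25):
    # Articles are lists of paragraph strings. For each paragraph of article1,
    # keep its best score against any paragraph of article2.
    if not article1 or not article2:
        return False
    best = [max(paragraph_score(p1, p2) for p2 in article2) for p1 in article1]
    # Match: average best score reaches the cutoff and no paragraph scores 0.
    return min(best) > 0 and sum(best) / len(best) >= cutoff

# Hypothetical sample paragraphs, invented for illustration.
original = ["The central bank raised interest rates by a quarter point on Wednesday."]
reworded = ["On Wednesday, the central bank raised interest rates by a quarter point, officials said."]
unrelated = ["Heavy rain is expected across the northern coast this weekend."]

print(paragraph_score(reworded[0], original[0]))
print(is_match(reworded, original))
print(is_match(unrelated, original))
```

Note that the score is directional: 8 of the 12 trigrams in the reworded paragraph appear in the original, while 8 of the original's 10 trigrams appear in the reworded one, so the order of the two articles matters.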

The major media sites included in this analysis are Reuters, AP (Associated Press), Fox News (including Fox Business), TASS, and the BBC. To gather article text from these websites (as well as BNN itself), the Wayback Machine, Archive.today, and Twitter search results were used to compile a list of unique article URLs for each site. The archive sites were also used to harvest the text of (most of) the articles as raw HTML, which was then parsed into paragraphs and stored in CSV format using the following Python code:

```python
import os
import sys

import bs4
import pandas as pd

path = sys.argv[1]      # directory containing the archived HTML files
out_path = sys.argv[2]  # directory where the per-site CSV files are written


# Each parser takes the parsed HTML and the article URL and returns a list of
# paragraph rows, or None if the page does not have the expected structure.

def bnn (soup, url):
    elements = soup.find_all ("div", {"class" : "post-content"})
    if len (elements) == 1:
        rows = []
        elements = elements[0].find_all ("p")
        pos = 0
        times = soup.find_all ("time", {"class" : "post-date"})
        t = times[0]["datetime"].replace ("T", " ")
        for element in elements:
            text = element.text.strip ()
            rows.append ({"url" : url,
                          "text" : text,
                          "paragraphID" : pos,
                          "paragraph" : pos,
                          "utcTime" : t})
            pos = pos + 1
        return rows
    else:
        return None


def reuters (soup, url):
    times = soup.find_all ("time")
    if times is None or len (times) == 0:
        return None
    if times[0].has_attr ("datetime"):
        t = times[0]["datetime"]
    else:
        spans = times[0].find_all ("span")
        if len (spans) == 4:
            t = spans[1].text + " " + spans[2].text
        elif len (spans) == 1:
            t = spans[0].text
        else:
            t = times[0].text
    elements = soup.find_all ("p")
    pos = 0
    rows = []
    for element in elements:
        # article paragraphs are identified by one of several page layouts
        para = None
        if element.has_attr ("data-testid"):
            test = element["data-testid"]
            if test.startswith ("paragraph-"):
                para = int (test.replace ("paragraph-", ""))
        elif element.has_attr ("id"):
            test = element["id"]
            if test.startswith ("paragraph-"):
                para = test.replace ("paragraph-", "")
        elif element.has_attr ("class") and element["class"][0].startswith ("Paragraph-paragraph"):
            para = pos
        if para is not None:
            rows.append ({"url" : url,
                          "text" : element.text,
                          "paragraphID" : para,
                          "paragraph" : pos,
                          "utcTime" : t})
            pos = pos + 1
    return rows


def ap_news (soup, url):
    times = soup.find_all ("span", {"data-key" : "timestamp"})
    if len (times) == 1:
        t = times[0]["data-source"]
    else:
        return None
    articles = soup.find_all ("div", {"class" : "Article"})
    if len (articles) == 0:
        articles = soup.find_all ("article")
    if len (articles) != 1:
        return None
    else:
        # all descendant <p> tags (find_all is recursive by default)
        elements = articles[0].find_all ("p")
        rows = []
        pos = 0
        for element in elements:
            rows.append ({"url" : url,
                          "text" : element.text,
                          "paragraphID" : pos,
                          "paragraph" : pos,
                          "utcTime" : t})
            pos = pos + 1
        return rows


def fox (soup, url):
    times = soup.find_all ("time")
    if times is None or len (times) == 0:
        return None
    if times[0].has_attr ("datetime"):
        t = times[0]["datetime"]
    else:
        t = times[0].text
    articles = soup.find_all ("div", {"class" : "article-body"})
    if len (articles) != 1:
        return None
    else:
        elements = articles[0].find_all ("p")
        rows = []
        pos = 0
        for element in elements:
            rows.append ({"url" : url,
                          "text" : element.text,
                          "paragraphID" : pos,
                          "paragraph" : pos,
                          "utcTime" : t})
            pos = pos + 1
        return rows


def tass (soup, url):
    times = soup.find_all ("dateformat")
    if times is None or len (times) == 0:
        print ("no time: " + url)
        return None
    else:
        t = times[0]["time"]
    articles = soup.find_all ("div", {"class" : "text-block"})
    if len (articles) != 1:
        print ("articles " + str (len (articles)) + " " + url)
        return None
    else:
        elements = articles[0].find_all ("p")
        rows = []
        pos = 0
        for element in elements:
            rows.append ({"url" : url,
                          "text" : element.text,
                          "paragraphID" : pos,
                          "paragraph" : pos,
                          "utcTime" : t})
            pos = pos + 1
        return rows


def bbc (soup, url):
    times = soup.find_all ("time")
    if times is None or len (times) == 0:
        t = ""
    elif times[0].has_attr ("datetime"):
        t = times[0]["datetime"]
    else:
        t = times[0].text
    articles = soup.find_all ("article")
    if len (articles) != 1:
        # fall back to the <main> element when there is no single <article>
        articles = soup.find_all ("main")
        if len (articles) != 1:
            return None
        else:
            elements = articles[0].find_all ("div", {"dir" : "ltr"},
                                             recursive=False)
            if len (elements) == 0:
                elements = articles[0].find_all ("div", {"dir" : "rtl"},
                                                 recursive=False)
            rows = []
            pos = 0
            for element in elements:
                rows.append ({"url" : url,
                              "text" : element.text,
                              "paragraphID" : pos,
                              "paragraph" : pos,
                              "utcTime" : t})
                pos = pos + 1
    else:
        elements = articles[0].find_all ("div",
                                         {"data-component" : "text-block"},
                                         recursive=False)
        rows = []
        pos = 0
        for element in elements:
            rows.append ({"url" : url,
                          "text" : element.text,
                          "paragraphID" : pos,
                          "paragraph" : pos,
                          "utcTime" : t})
            pos = pos + 1
    return rows


# domain -> [parser function, site name used for the output file]
parsers = {
    "bnn.network" : [bnn, "BNN"],
    "reut.rs" : [reuters, "Reuters"],
    "www.reuters.com" : [reuters, "Reuters"],
    "apnews.com" : [ap_news, "AP"],
    "www.foxbusiness.com" : [fox, "Fox News"],
    "www.foxnews.com" : [fox, "Fox News"],
    "tass.com" : [tass, "TASS"],
    "bbc.com" : [bbc, "BBC"],
    "www.bbc.com" : [bbc, "BBC"],
}

data = {}
errors = {}
domains = []

# file names are the article URL with "/" replaced by "_"
for f in os.listdir (path):
    domain = f[:f.find ("_")]
    url = "https://" + f.replace ("_", "/")
    domains.append (domain)
    if domain in parsers:
        parser = parsers[domain]
        try:
            with open (os.path.join (path, f), "r") as file:
                html = file.read ()
            # naming a parser avoids bs4's GuessedAtParserWarning;
            # "lxml" can be used instead if it is installed
            soup = bs4.BeautifulSoup (html, "html.parser")
            result = parser[0] (soup, url)
            if result is not None:
                if len (result) == 0:
                    print ("zero paragraphs: " + url)
                if parser[1] in data:
                    data[parser[1]].extend (result)
                else:
                    data[parser[1]] = result
            else:
                print ("error parsing: " + url)
                if parser[1] in errors:
                    errors[parser[1]] = errors[parser[1]] + 1
                else:
                    errors[parser[1]] = 1
        except Exception as e:
            print ("unknown error: " + f + " (" + str (e) + ")")

# write one CSV of paragraphs per site, with a short summary
for site in data.keys ():
    print (site)
    df = pd.DataFrame (data[site])
    print (str (len (set (df["url"]))) + " articles successfully parsed")
    print (str (len (df.index)) + " paragraphs total")
    print (str (errors[site] if site in errors else 0) + " articles with errors")
    df.to_csv (os.path.join (out_path, site + "_content.csv"), index=False)
    print ("----------")
```

From there, the parsed articles from each news outlet were compared to the parsed articles from every other outlet, with matches written to a CSV file (along with the average and paragraph-specific scores for each pair of articles matched). Python code for the comparison process is below; the threshold for matching and the n-gram length can both be tweaked with minimal effort. (Note that this is a very basic algorithm; you can easily find papers and blog posts on a variety of plagiarism detection techniques by typing “plagiarism detection” into your search engine of choice.)

```python
import os
import re
import sys
import time

import pandas as pd


def parse_words (text):
    # lowercase, split on non-word characters, and drop the empty strings
    # that re.split leaves when a paragraph starts or ends with punctuation
    return [w for w in re.split (r"\W+", text.lower ()) if w]


def get_phrases (article, window=3, min_words=10):
    # one set of n-grams per paragraph of at least min_words words,
    # cached on the article so it is only computed once
    if "phrases" in article:
        return article["phrases"]
    phrases = []
    for text in article["paragraphs"]:
        if len (text) >= min_words:
            phrases.append (set ([" ".join (text[i:i + window])
                                  for i in range (len (text) - window + 1)]))
    article["phrases"] = phrases
    return phrases


def phrase_similarity (phrases1, phrases2):
    # fraction of the first paragraph's n-grams that also occur in the second
    if len (phrases1) == 0 or len (phrases2) == 0:
        return None
    common = len (set.intersection (phrases1, phrases2))
    return common / len (phrases1)


def compare_articles (article1, article2):
    # for each paragraph of article1, the best score against any paragraph of article2
    scores = []
    for phrases1 in get_phrases (article1):
        score = 0
        for phrases2 in get_phrases (article2):
            pscore = phrase_similarity (phrases1, phrases2)
            if pscore is not None:
                score = max (pscore, score)
        scores.append (score)
    return scores


def compare_article_sets (site1, site2, articles1, articles2,
                          cutoff=0.2, max_zeroes=0):
    # cutoff: minimum average score for a match (default 0.2; pass 0.25 for
    # the 25% threshold described above)
    # max_zeroes: number of paragraphs allowed to have a best score of 0
    matches = []
    for article1 in articles1:
        for article2 in articles2:
            scores = compare_articles (article1, article2)
            zeroes = sum (1 for score in scores if score == 0)
            score = 0 if len (scores) == 0 else sum (scores) / len (scores)
            if score >= cutoff and zeroes <= max_zeroes:
                matches.append ({"site1" : site1,
                                 "site2" : site2,
                                 "article1" : article1["url"],
                                 "article2" : article2["url"],
                                 "scores" : scores,
                                 "score" : score})
                print ("potential match " + str (scores) + " " + article1["url"] + " <- " + article2["url"])
    return matches


def count_and_sort (df, columns):
    g = df.groupby (columns)
    return pd.DataFrame ({"count" : g.size ()}).reset_index ().sort_values (
        "count", ascending=False).reset_index ()


start_time = time.time ()
data = {}
path = sys.argv[1]      # directory of <site>_content.csv files
site1 = sys.argv[2]     # site whose articles are checked for copying
out_file = sys.argv[3]  # CSV file that receives the matches

# load each site's paragraphs and group them into articles
for f in os.listdir (path):
    df = pd.read_csv (os.path.join (path, f))
    df["text"] = df["text"].fillna ("")
    site = f.replace ("_content.csv", "")
    articles = []
    for url in set (df["url"]):
        articles.append ({"url" : url,
                          "paragraphs" : [parse_words (text) for text in
                                          df[df["url"] == url].sort_values ("paragraph")["text"]]})
    data[site] = articles
    print (site + ": " + str (len (articles)))

# compare site1 against every other site
matches = []
sites = set (data.keys ())
for site2 in sites:
    if site1 != site2:
        print (site1 + " : " + site2)
        matches.extend (compare_article_sets (site1, site2, data[site1], data[site2]))

print (str (len (matches)) + " potential matches")
df = pd.DataFrame (matches)
df = df.sort_values ("score", ascending=False)
df = df[["site1", "site2", "score", "scores", "article1", "article2"]]
print (str (len (df.index)) + " rows")
print (count_and_sort (df, ["site1", "site2"]))
for site in sites:
    print (site + ": " + str (len (set (df[df["site1"] == site]["article1"]))) + " articles match")
df.to_csv (out_file, index=False)
print (str (time.time () - start_time) + " seconds")
```
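One practical detail when working with the output: the comparison script stores the paragraph-level scores as a Python list in the `scores` column, and pandas writes a list to CSV as its string form (for example `"[0.8, 0.4]"`). A minimal sketch of reading the matches back with only the standard library follows. The helper names (`load_matches`, `articles_matched_per_pair`) and the sample rows, including the example.com URLs, are invented for illustration and are not part of the original analysis.

```python
import ast
import csv
import io
from collections import defaultdict

def load_matches(csv_file):
    # Parse the matches CSV, turning "score" into a float and the
    # stringified "scores" list back into a real list of floats.
    rows = []
    for row in csv.DictReader(csv_file):
        row["score"] = float(row["score"])
        row["scores"] = ast.literal_eval(row["scores"])
        rows.append(row)
    return rows

def articles_matched_per_pair(rows):
    # Count distinct article1 URLs for each (site1, site2) pair, so an
    # article that matches several sources is only counted once per pair.
    matched = defaultdict(set)
    for row in rows:
        matched[(row["site1"], row["site2"])].add(row["article1"])
    return {pair: len(urls) for pair, urls in matched.items()}

# Hypothetical rows in the same column layout the script writes.
SAMPLE = """site1,site2,score,scores,article1,article2
BNN,Reuters,0.6,"[0.8, 0.4]",https://example.com/bnn-1,https://example.com/reuters-1
BNN,Reuters,0.5,"[0.5]",https://example.com/bnn-1,https://example.com/reuters-2
BNN,AP,0.3,"[0.3, 0.3]",https://example.com/bnn-2,https://example.com/ap-1
"""

rows = load_matches(io.StringIO(SAMPLE))
print(rows[0]["scores"])
print(articles_matched_per_pair(rows))
```

With a real output file, `load_matches(open(out_file, newline=""))` would take the place of the in-memory sample.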

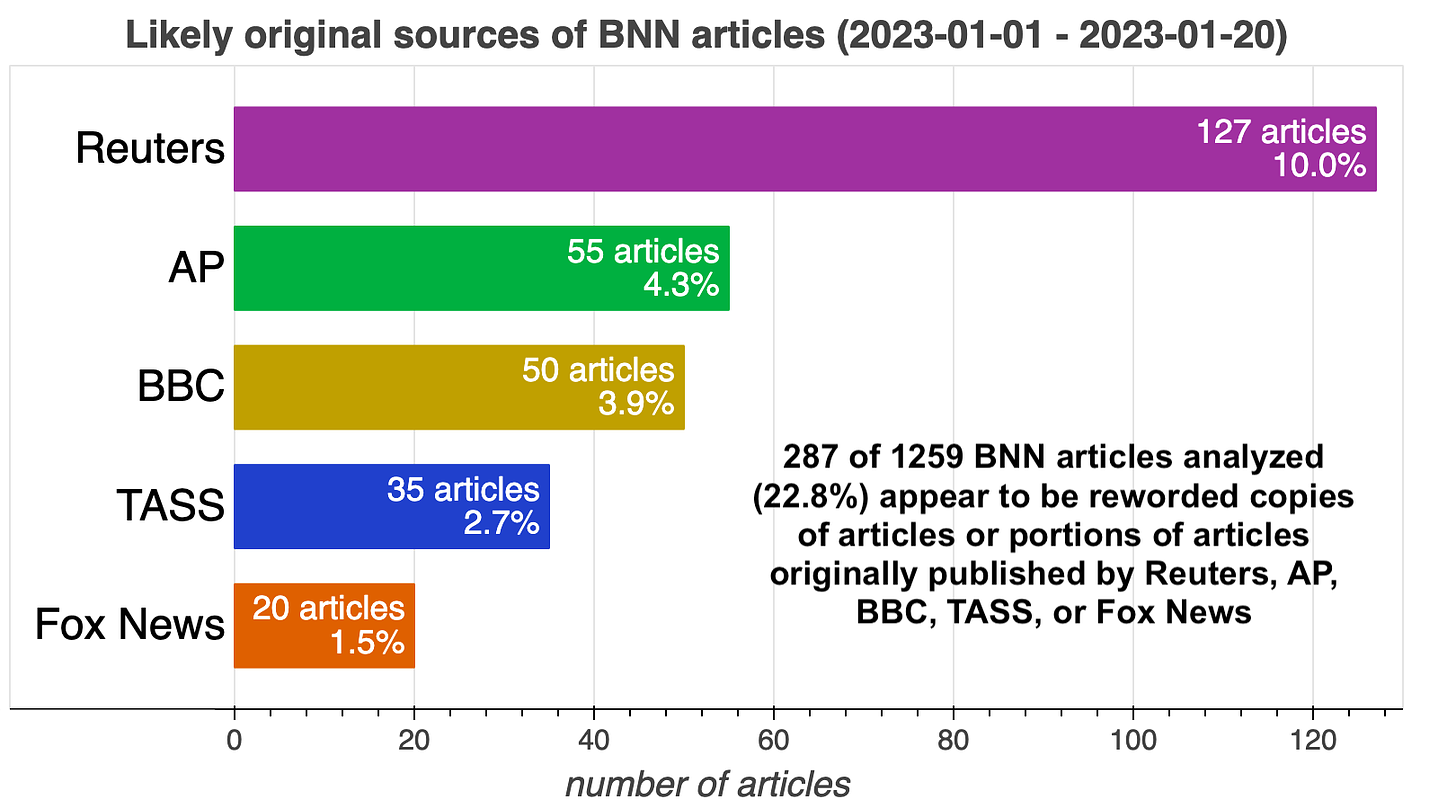

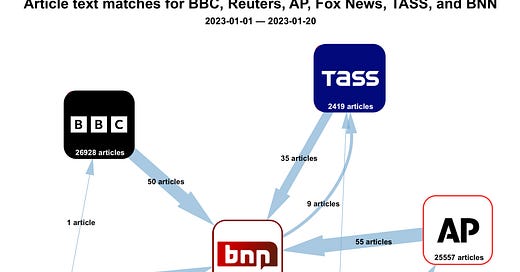

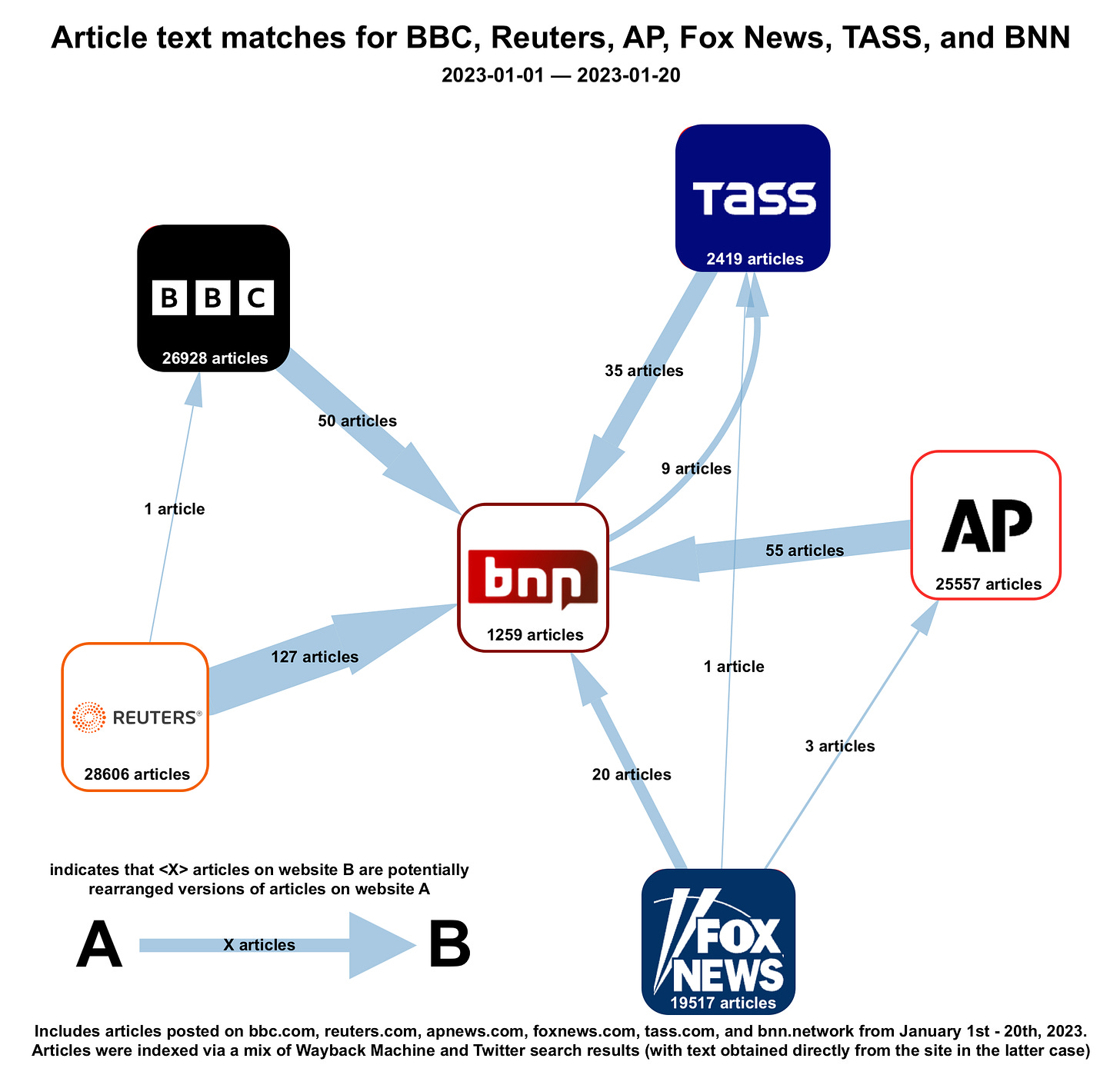

Results: 287 of 1259 BNN articles analyzed (22.8%) appear to have been copied from one of these five sites (Reuters, AP, Fox News, TASS, and the BBC). This is likely just the tip of the iceberg in terms of the total amount of non-original content on the site; in most cases, simply plugging a sentence or two from a BNN article into a search engine leads to an article on an established news site from which the BNN article was likely copied and rephrased. A major exception to this rule is articles about BNN itself (or its founder Gurbaksh Chahal), all of which seem to be original material.

As a sanity check, the major outlets were compared to each other (as well as BNN) using the same algorithm used to compare BNN to the major outlets. The results were markedly different: only ~0.01% of articles in the mainstream outlets were flagged as potentially copied, as opposed to 22.8% of the BNN articles.

Seems like Chahal lost the plot somewhere between savagely beating his ex-girlfriend and interviewing Steven Jarvis as source of truth. You hate to see it.

Really liked the debunk. There are writers who write 1,000 articles per hour, updated in seconds. How does this happen? I was wondering whether all of this is AI content that gets assigned to an author.