Scraping public GoFundMe donation data

How to write a simple Python script to scrape the most recent 1000 donations from any GoFundMe fundraiser

The crowdfunding platform GoFundMe currently makes the most recent 1000 donations to each fundraiser publicly available via a scrollable list. (Private payment-related information is not available, but a user’s public display name, as well as the amount and time of the donation, are.) Most modern web platforms, GoFundMe included, work in basically the same way: the user interface communicates with the site’s backend via REST (REpresentational State Transfer) web services. It is often possible to scrape publicly available user-generated content, such as a user’s social media posts or a list of fundraiser donations, by calling these web services directly. Here’s an overview of how to use this technique to write a simple GoFundMe donation scraper in Python.

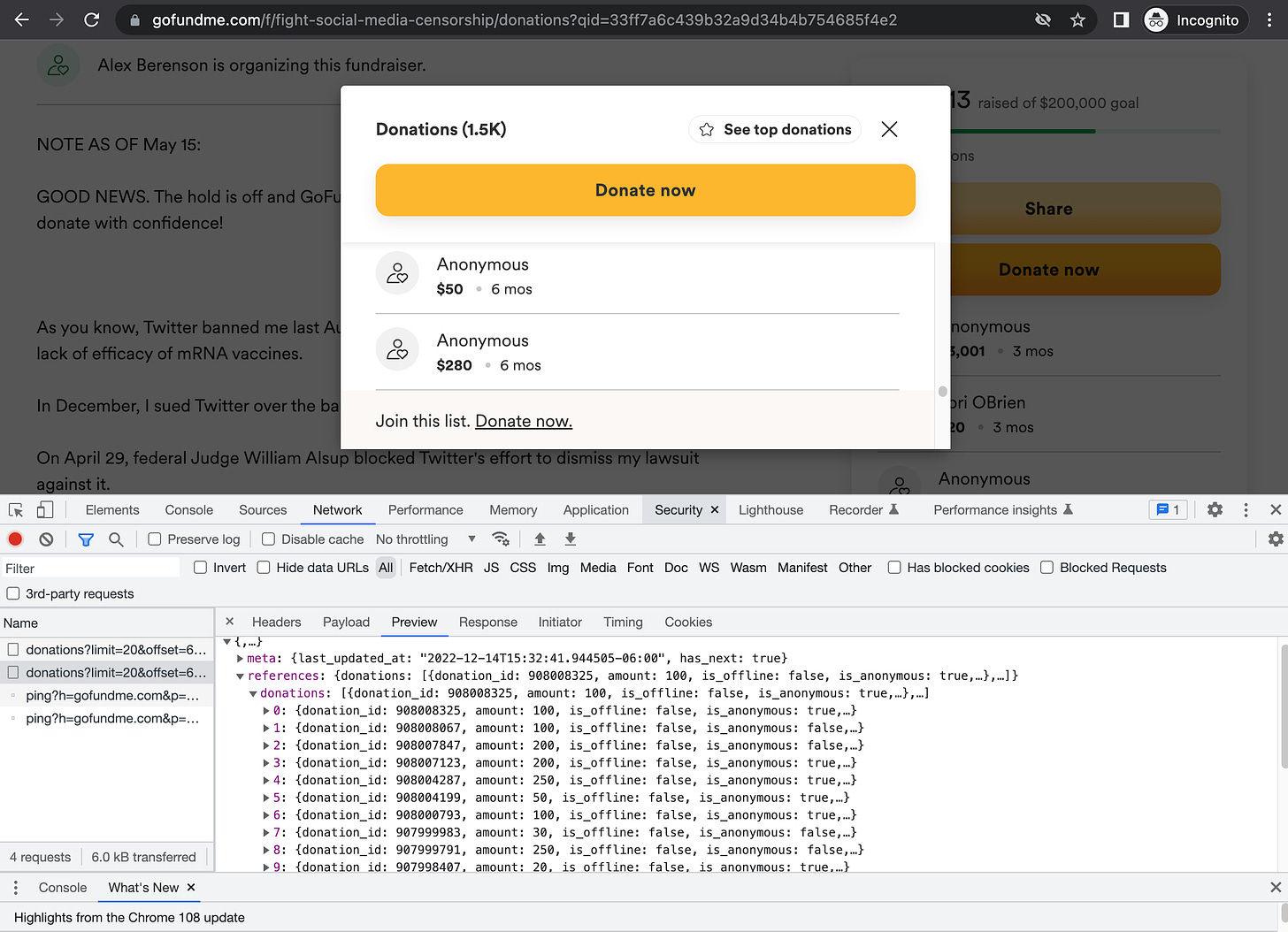

We can use the built-in developer tools included with most major browsers to peek under the hood and figure out how the web services underlying a given platform, such as GoFundMe, work. In Google Chrome (shown above), this is done by opening the “Developer Tools” under the “Developer” section of the “View” menu and clicking the “Network” tab; other browsers have similar features.

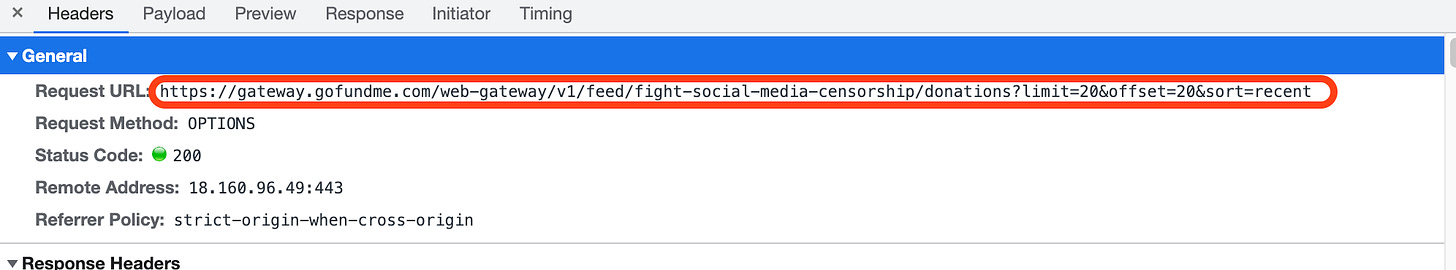

Here we can inspect the requests sent to, and the responses returned by, the platform’s underlying web services. Generally, the responses will be in JSON (JavaScript Object Notation) format, which is easily parsed by most modern programming languages, including Python. In the case of GoFundMe, the donation list is retrieved by calling https://gateway.gofundme.com/web-gateway/v1/feed/<fundraiser_name>/donations?limit=<X>&offset=<Y>&sort=recent. The returned JSON contains a references dictionary, which in turn contains a donations list; each item in that list is a dictionary holding one donation and its associated metadata. The maximum value for the limit parameter (which controls the number of donations returned at a time) currently appears to be 100, so in order to get the full list of the 1000 most recent donations, we have to call the service repeatedly (up to 10 times), increasing the offset each time.
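The paging arithmetic described above can be sketched on its own, without touching the network. This is only a sketch: `page_urls` and `extract_donations` are hypothetical helper names, the page size of 100 and the cap of 1000 are the observed values mentioned above rather than documented limits, and passing `offset=0` explicitly for the first page is an assumption.

```python
def page_urls(name, page_size=100, max_donations=1000):
    """Build the donation-feed URLs needed to cover max_donations entries."""
    base = ("https://gateway.gofundme.com/web-gateway/v1/feed/"
            f"{name}/donations")
    urls = []
    # offsets 0, 100, 200, ... up to (but not including) max_donations
    for offset in range(0, max_donations, page_size):
        urls.append(f"{base}?limit={page_size}&offset={offset}&sort=recent")
    return urls


def extract_donations(payload):
    """Pull the donations list out of one parsed JSON response."""
    return payload["references"]["donations"]


urls = page_urls("example-fundraiser")  # placeholder fundraiser name
print(len(urls))
print(urls[0])
print(urls[-1])
```

With the default arguments this produces ten URLs, one per page of 100 donations.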

Here’s an example of how to retrieve the donations for a given GoFundMe fundraiser in Python:

```python
# simple python script to retrieve the most recent 1000 donations
# from any GoFundMe fundraiser
#
# this is intended as a simple example of how to scrape public
# data from websites that use REST webservices under the hood

import sys

import pandas as pd
import requests


def read_gofundme_donations(name):
    stem = ("https://gateway.gofundme.com/web-gateway/v1/feed/"
            + name + "/donations?limit=100&sort=recent")
    pos = 0
    rows = []
    while True:
        # the first page needs no offset; later pages start at pos
        url = stem if pos == 0 else stem + "&offset=" + str(pos)
        print(url)
        r = requests.get(url, timeout=30)  # don't hang forever on a stalled request
        r.raise_for_status()  # stop on HTTP errors instead of parsing an error page
        new = r.json()["references"]["donations"]
        if len(new) == 0:
            break  # an empty page means there are no more donations
        rows.extend(new)
        pos = pos + len(new)
    if not rows:
        # nothing was returned, so there are no columns to clean up
        print("0 rows")
        return pd.DataFrame()
    # pages can overlap if new donations arrive while we are paging
    df = pd.DataFrame(rows).drop_duplicates("donation_id")
    print(str(len(df.index)) + " rows")
    df["created_at"] = pd.to_datetime(df["created_at"])
    return df.sort_values("created_at", ascending=False)


if __name__ == "__main__":
    # usage: <script> <fundraiser_name> <output_csv>
    df = read_gofundme_donations(sys.argv[1])
    df.to_csv(sys.argv[2], index=False)
```

The above code takes the fundraiser name and the name of the output CSV file as its two command-line arguments, and retrieves the most recent 1000 donations for the selected fundraiser (or all of the donations, if the fundraiser has received 1000 or fewer). The results are stored in the CSV file and include columns for the donation amount, the time of the donation, the display name of the GoFundMe user who made the donation (or “Anonymous” if the user is anonymous), the currency used, the unique donation ID, and a few other attributes. This is a basic example and various enhancements are possible (for example, GoFundMe also provides a list of the largest donations, which sometimes includes donations that fall outside the most recent 1000).
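As one example of what can be done with the resulting CSV file, here is a minimal sketch that totals donations per day using only the standard library. The rows in `sample_csv` are made up purely for illustration, `daily_totals` is a hypothetical helper, and the `amount` column name is an assumption (check the header of your own output file); `created_at` and `donation_id` are the names used by the script.

```python
import csv
import io
from collections import defaultdict

# Made-up rows standing in for the script's CSV output.
# The "amount" column name is an assumption.
sample_csv = """donation_id,amount,created_at
1,25.0,2021-03-01 10:15:00-05:00
2,100.0,2021-03-01 18:40:00-05:00
3,10.0,2021-03-02 09:05:00-05:00
"""


def daily_totals(csv_file):
    """Sum the donation amounts for each calendar day in the file."""
    totals = defaultdict(float)
    for row in csv.DictReader(csv_file):
        day = row["created_at"][:10]  # keep the YYYY-MM-DD part of the timestamp
        totals[day] += float(row["amount"])
    return dict(totals)


totals = daily_totals(io.StringIO(sample_csv))
print(totals)
```

To run it against a real file, pass an open file object instead of the in-memory sample, for example `daily_totals(open("donations.csv", newline=""))`. Days are taken from the timestamp as written, so they follow whatever UTC offset the file contains.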

The techniques described here are not limited to GoFundMe. As mentioned earlier, most modern platforms that host user-generated content have a similar architecture, and variations on the methods shown here should be generally applicable, although sites such as Facebook or Instagram that require a login to meaningfully explore public content are a bit trickier to work with. Note that all tools for web scraping will sometimes require bug fixes when the operators of a platform add or remove features, redesign the user interface, or make other changes.
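Because the shape of a response can change without notice, it can help to fail with a clear message rather than an obscure KeyError. The following is a minimal sketch of that idea; `checked_donations` is a hypothetical helper, and the only structure it checks is the references/donations nesting described earlier.

```python
def checked_donations(payload):
    """Return the donations list, or raise ValueError if the layout changed."""
    references = payload.get("references") if isinstance(payload, dict) else None
    if not isinstance(references, dict):
        raise ValueError("response has no 'references' dictionary")
    donations = references.get("donations")
    if not isinstance(donations, list):
        raise ValueError("'references' has no 'donations' list")
    return donations


# A payload with an unexpected layout triggers the descriptive error.
try:
    checked_donations({"data": []})
except ValueError as err:
    print("scraper needs updating:", err)
```

A check like this does not prevent breakage, but it makes it obvious that the platform has changed rather than that the script has a bug of its own.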

Are there any methods to get donation records beyond the most recent 1000?

OK, but what possible use would this have?