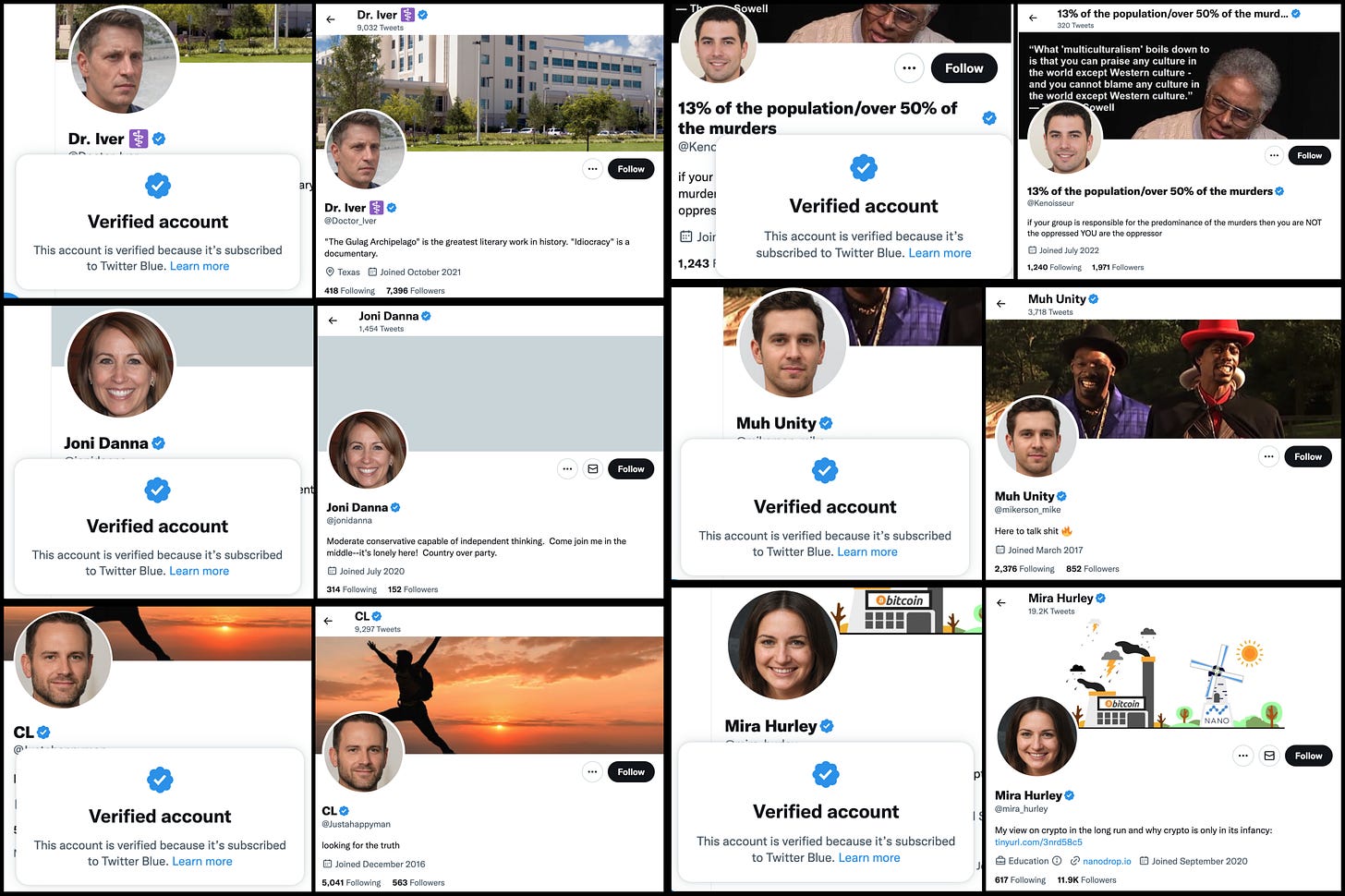

Inauthentic activity and Twitter Blue verification

When "verification" boils down to "someone has a phone and paid $8", it's not surprising that spam and fake accounts slip through the cracks

One of the highly touted benefits of the Twitter Blue paid verification feature is an alleged reduction in spam and inauthentic activity. The underlying assumption, that requiring a phone number and a monthly payment creates friction for certain types of inauthentic activity, is accurate: spinning up large numbers of “verified” spam accounts requires deep pockets. Creating smaller numbers of inauthentic accounts with blue checkmarks, however, remains relatively easy, especially since the Twitter Blue verification feature currently does not require proof of identity. Additionally, the policy of giving algorithmic priority to replies from Twitter Blue accounts lets spammers push replies that would previously have been buried to the top of a tweet’s comment section.

Cryptocurrency reply spam similar to that shown in the above image has been an issue on Twitter (and other platforms) for several years. Before the introduction of the Twitter Blue verification feature, this spam generally came from large swarms of batch-created and hijacked accounts (frequently automated), with the occasional hacked celebrity account thrown in for good measure. Likely because of the repetitive nature of the replies, Twitter’s spam detection systems did a decent (albeit far from perfect) job of relegating them to the bottom of the comments section.

Twitter Blue changes the situation. On April 25th, 2023, Twitter CEO Elon Musk announced that “[Twitter Blue] verified accounts are now prioritized”. Almost immediately, cryptocurrency spammers began exploiting this boost: their repetitive replies started showing up at or near the top of comment sections rather than being relegated to the bottom or hidden entirely. Many of these blue check reply spam accounts also have large flocks of fake followers, possibly another attempt to confer unwarranted legitimacy upon them. This particular group of reply spam accounts was removed by Twitter roughly two days after being publicly documented, but similar accounts continue to be created.

Another ongoing issue with the Twitter Blue verification system is that removing the identity verification aspect of “verification” opens the door to “verifying” fake personas. For example, blue check accounts with GAN-generated faces have repeatedly popped up ever since the new verification system was unveiled. Although some of these accounts may be aiming for anonymity rather than deception, the combination of the blue checkmark (interpreted by many people as a sign of authenticity) and the lack of any disclosure that the image is artificial makes misleading use easy. (Inauthenticity should not be confused with anonymity here: it is entirely possible to design and implement a verification mechanism wherein Twitter internally verifies personal identity, professional credentials, or organizational affiliation while allowing the user to remain publicly anonymous.)

In conclusion, three observations about the current state of Twitter Blue verification:

Despite the financial barrier of paying $8 per month per account, deceptive and malicious use of Twitter Blue remains feasible and is happening.

The $8 a month is not a get-out-of-jail-free card when it comes to spam and platform manipulation. (Blue check reply spammers were suspended quickly once documented and reported as spam.)

Things are a bit more nebulous when it comes to paying $8 to put a blue check on a potentially inauthentic account. In addition to the accounts with GAN-generated faces mentioned above, an account that posts deepfake videos, has tens of thousands of fake followers, and has been renamed and redecorated multiple times currently boasts a Twitter Blue checkmark (@ThePatriotOasis).

Does Twitter have a "mass report" feature for reporting fake/bot accounts? Creating one-off reports is too cumbersome, and most users ignore rather than report.

There are obvious challenges to a mass reporting tool, and you can't give a mass spam report the same weight as a regular report. But I think if you used two different pipelines, it would be fairly easy for Twitter to verify and at least delete all similar accounts (low follower/tweet counts), much more quickly than relying on the public to report each account for spam individually.

I'm just exceptionally jaded with Twitter; I've deactivated my account. I'm trying to be more active here and to discover valuable newsletters to subscribe to. I'm not saying Substack is a perfect replacement or anything, and I don't expect I'll be posting much on Notes either, but it's a whole lot less noisy here.