Generative AI and the liar's dividend

As synthetic images and video grow in sophistication, attempts to portray real events as fake and fake events as real will both proliferate

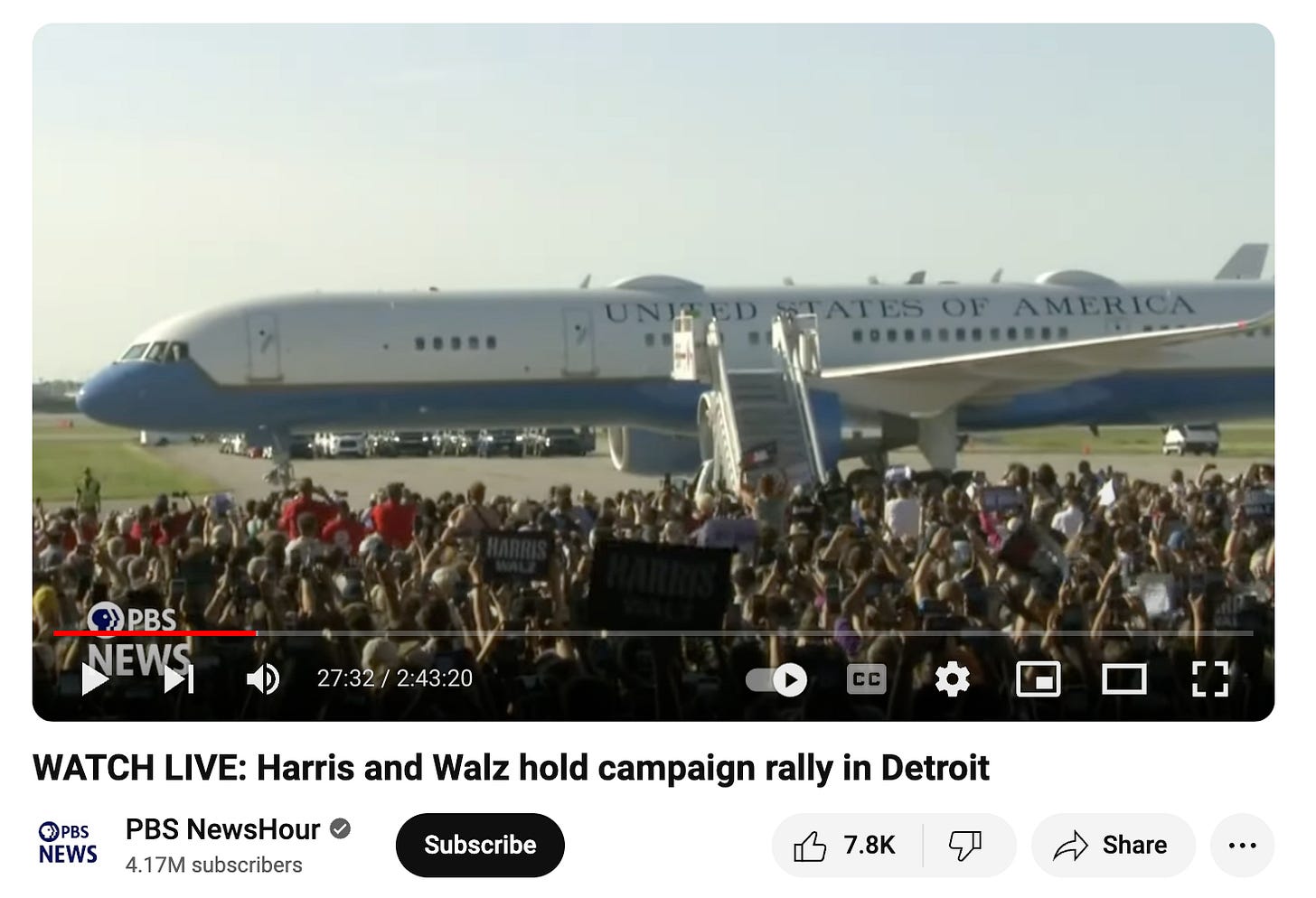

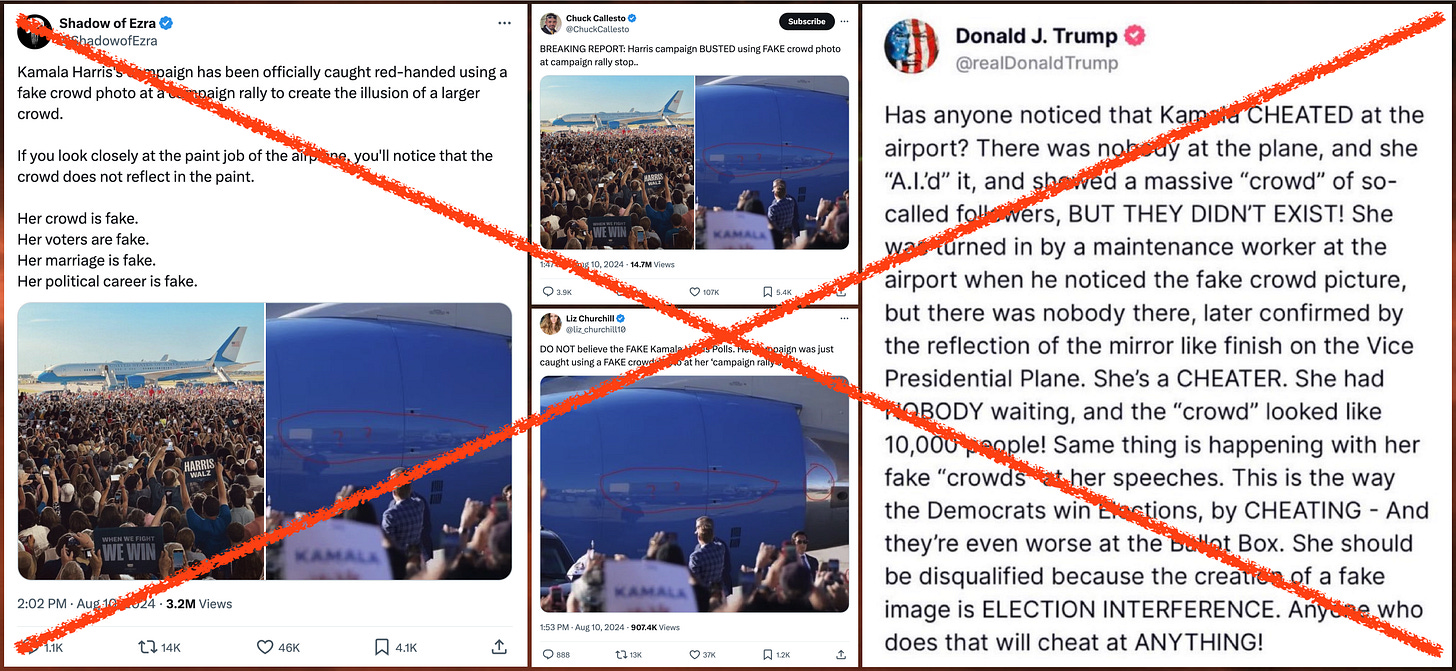

On August 10th, 2024, several prominent X accounts posted a false claim that a photo of a crowd of supporters at a Detroit rally for Democratic presidential candidate Kamala Harris was faked, possibly via generative AI tools. This assertion, which was based in part on faulty forensic analysis of the photograph in question, appears to have originated with right-wing influencer Chuck Callesto. The falsehood was picked up in short order by Republican candidate Donald Trump, who repeated and embellished it on his Truth Social account.

Situations of this sort, wherein photographs, audio, and video of real events are misrepresented as deepfakes, will only become more common as the technology to generate synthetic media grows increasingly powerful. In such an environment, there is no single magic approach to discerning fact from digital fiction; instead, a variety of methods of evaluating evidence and a broader awareness of context are required. This article briefly examines four different scenarios:

A real image misrepresented as fake (the Detroit Harris rally photo)

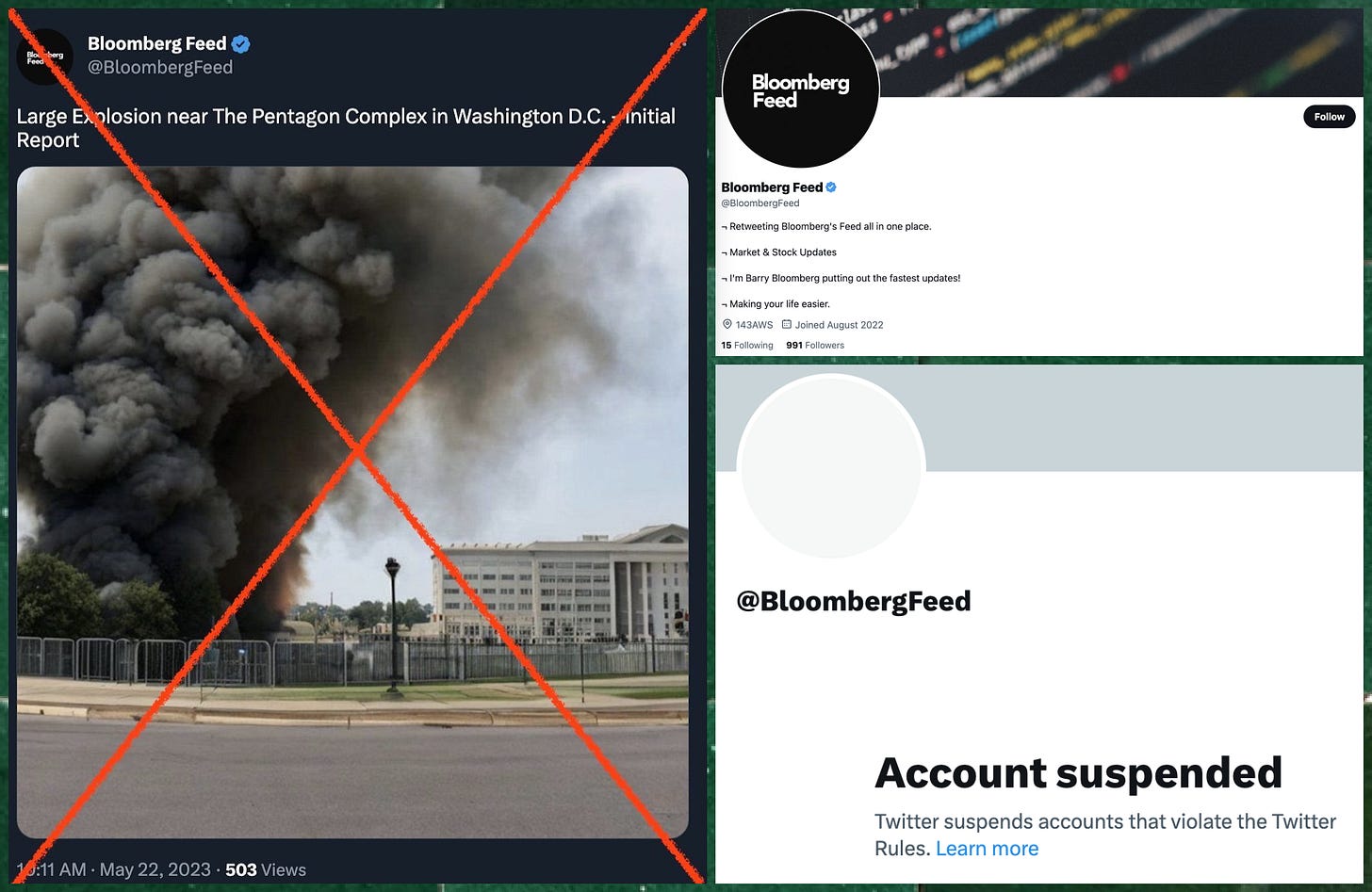

A fake image misrepresented as real (an AI-generated image of an explosion in Washington, DC)

A fake image posted for entertainment purposes (an AI-generated image of a Harris rally that was disclosed as fake by the person who posted it)

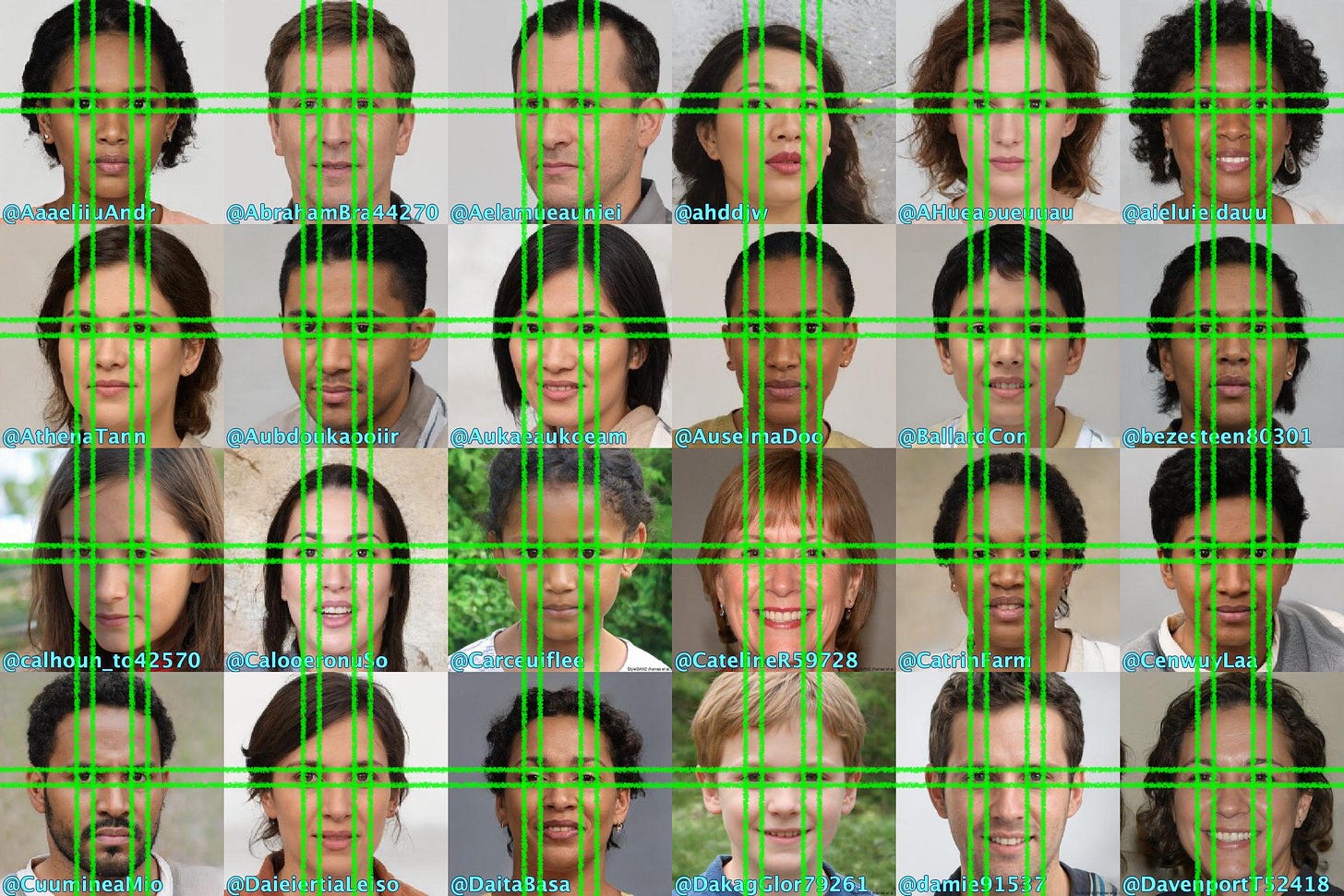

A set of fake images used to create the illusion of a group of people (a network of X accounts with AI-generated faces)

The false claims regarding the photo of the Detroit Kamala Harris rally are an example of a genuine photo being misrepresented as fake. Searching for video of this rally turns up footage of the entire event from multiple news organizations, along with a variety of shorter clips. The early portion of a video of the event posted to YouTube by PBS shows the crowd from multiple angles, followed by the plane pulling into the position in which it appears in the allegedly suspicious image. A number of news organizations posted additional photographs from a variety of vantage points as well, corroborating the existence of the airport rally and the associated crowd. The only alleged anomaly in the image (the lack of a reflection of the crowd in one of the airplane’s engines) is the result of a misunderstanding of how reflections and curved surfaces work, and is easily dispelled when the aircraft is seen in motion. This phenomenon, in which dishonest actors exploit the public’s lack of trust in the information environment to falsely portray real events as fake, has been referred to as the “liar’s dividend”.

The inverse situation, wherein a fake image is presented as real, has of course happened on numerous occasions. One such event took place on May 22nd, 2023, when multiple X accounts posted an alleged photograph of an explosion near the Pentagon in Washington, DC, briefly impacting the stock market. The image contains several visual anomalies that call its veracity into question, but expertise in image forensics is not required to debunk it. Rather, the total absence of video, eyewitness accounts, or reporting from journalists on the aftermath is sufficient to demonstrate that the alleged explosion is vanishingly unlikely to have occurred.

Not all uses of fake images are intentional acts of deception. On August 10th, 2024, X user @Sarcasmcat24 posted an AI-generated image of a Kamala Harris rally, and followed it up with a post confirming that the image had been generated with AI and visually highlighting some of the more absurd errors present therein. Although several right-wing users attempted to spin this as an attempt at deception by the Harris campaign, the context (and the account’s history of posting obvious satire) makes it clear that the image was posted as a joke and has no discernible connection to any political operation.

Social media astroturf operations that use large numbers of accounts with AI-generated faces to create the illusion of a large group of people have been common for several years now. (The image above contains examples from an X spam network that posted the phrase “You did it magnificently!” over and over.) Much of the discussion of misleading uses of AI-generated media has focused on detection via anomalies in the output, such as the telltale eye placement seen in the StyleGAN-generated face images above, the extra fingers commonly seen in output from models such as Stable Diffusion, or the difficulties various image and video generators have with visual elements such as fabric and text.

While tricks of this sort are useful when confronted with synthetic media generated with known tools, they don’t generalize well, and they also tend to lead to false positives when people mistake benign artifacts (such as those produced by JPEG/MPEG compression) for indicators of inauthenticity. In cases where these techniques do work, additional analysis of the context is still necessary to establish whether or not the synthetically generated media is actually being used in a deceptive manner.

The takeaway from all of the above: in a world where technical tools that can fool the senses grow ever more powerful, awareness of context and the capacity to combine a variety of pieces of evidence from a variety of sources are and will continue to be more important than any given forensic trick for identifying fake media. We’re still (probably) far away from immersive simulations such as Star Trek’s holodecks, but the technology for creating convincing illusions will only get better with time. This creates fertile ground not only for manufacturing convincing falsehoods, but also for falsely construing real events as illusions, as Callesto and Trump attempted to do with the Detroit Harris rally.

Interesting how these tweets immediately get amplified by major accounts, Trump, Dinesh, etc. They seem to be aware in this case (others too) when they will be posted and by whom so they can quickly amplify them. Almost impossible for them to randomly see from rando Twitter accounts unless they are clearly a part of the scheme itself. Especially when this is not the first time they’ve done this. They specifically know who will post them, where to look and at what time they will be posted so they can amplify a pre-written script to go with them.

Their lying machine is well lubricated.