Exploring a YouTube spam network with Python

A programmatic look at a set of inauthentic YouTube accounts posting #accelerationism spam

Like every social media platform, YouTube plays host to a variety of spam accounts, and networks of such accounts are easier to uncover with tools that download public data from the platform in bulk. The examples in this article use the pytubefix Python library, a fork of the older and currently unmaintained pytube library. It does not require a Google developer account or your own API keys. The sections below walk through the process of exploring one set of inauthentic YouTube accounts that post #accelerationism spam.

```python
import json

import pandas as pd
from pytubefix import Search, Channel

PREFIX = "https://www.youtube.com/watch?v="

# dig the channel's @handle out of the video page's engagement panels;
# returns None if the expected structure is not present
def get_channel_handle (result):
    for p in result.initial_data["engagementPanels"]:
        p = p["engagementPanelSectionListRenderer"]
        p = p["content"]
        if "structuredDescriptionContentRenderer" in p.keys ():
            p = p["structuredDescriptionContentRenderer"]
            for p in p["items"]:
                if "videoDescriptionHeaderRenderer" in p:
                    p = p["videoDescriptionHeaderRenderer"]
                    if "channelNavigationEndpoint" in p:
                        p = p["channelNavigationEndpoint"]
                        p = p["browseEndpoint"]
                        p = p["canonicalBaseUrl"]
                        # strip the leading "/" from "/@handle"
                        return p[1:]

def youtube_search (query, max_results=1000):
    s = Search (query)
    prev = 0
    # keep loading pages until no new results arrive or the cap is hit
    while prev < len (s.results) and len (s.results) < max_results:
        prev = len (s.results)
        print (str (prev) + " results so far")
        s.get_next_results ()
    print (str (len (s.results)) + " results found")
    items = []
    for result in s.results:
        captions = [c.json_captions for c in result.captions]
        video_id = result.vid_info["videoDetails"]["videoId"]
        items.append ({
            "author"        : result.author,
            "captions"      : captions,
            "channel_id"    : result.channel_id,
            "description"   : result.description,
            "handle"        : get_channel_handle (result),
            "id"            : video_id,
            "keywords"      : result.keywords,
            "length"        : result.length,
            "publish_date"  : str (result.publish_date),
            "thumbnail_url" : result.thumbnail_url,
            "title"         : result.title,
            "url"           : PREFIX + video_id,
            "views"         : result.views
        })
        if len (items) % 10 == 0:
            print (str (len (items)) + " items downloaded and parsed")
    print (str (len (items)) + " items downloaded and parsed")
    return items

query = "\"#accelerationism\""
out_file = "youtube_accelerationism_results.json"
items = youtube_search (query)
with open (out_file, "w") as f:
    json.dump (items, f, indent=2)
```

To find the YouTube accounts posting #accelerationism spam, we start by searching for “#accelerationism” using the pytubefix library. The search query is in quotes to make sure that the “#” is included, as the spam accounts frequently post the word “accelerationism” in hashtag form, while real users (at least on YouTube) who use the term usually do not. The script above automates the process of running the search and repeatedly loading more results until no additional results remain or a maximum number of results is reached. Useful fields such as the account/channel that posted the video, the title, length, description, and date of posting are extracted from each search result and stored in a text file in JSON format. At the time of this writing, this process yields 179 videos posted by 53 distinct YouTube accounts.

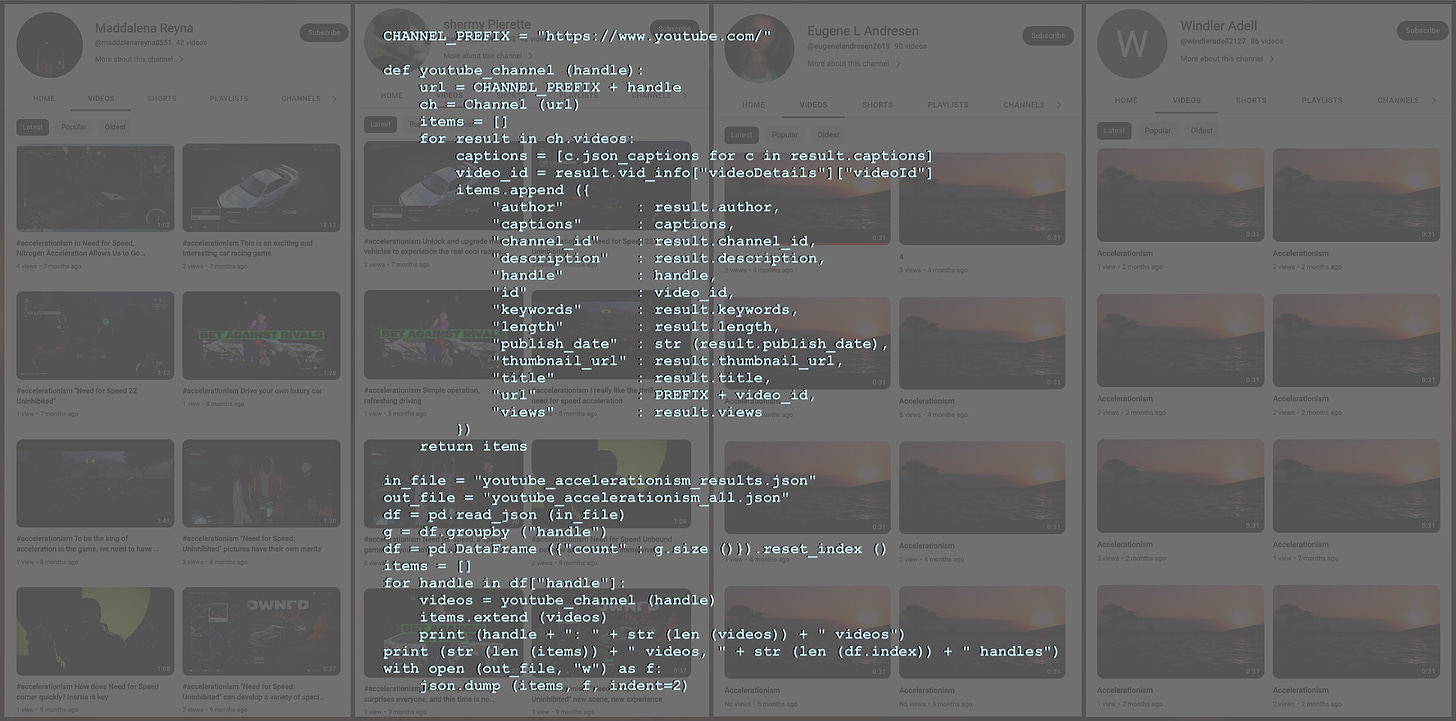
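The video and account totals quoted above can be read straight off the search output. The following is a minimal sketch, assuming records with the same field names as those written by the search script; the `summarize` helper and the `sample` rows are hypothetical and are not part of the original analysis.

```python
import pandas as pd

def summarize(items):
    """Return (number of videos, number of distinct handles, videos per handle)."""
    df = pd.DataFrame(items)
    # videos per account, most prolific first
    per_handle = df.groupby("handle").size().sort_values(ascending=False)
    return len(df.index), df["handle"].nunique(), per_handle

# hypothetical records shaped like the search output
sample = [
    {"handle": "@example_one", "id": "aaa", "title": "first"},
    {"handle": "@example_one", "id": "bbb", "title": "second"},
    {"handle": "@example_two", "id": "ccc", "title": "third"},
]

n_videos, n_accounts, per_handle = summarize(sample)
print(n_videos, "videos posted by", n_accounts, "distinct accounts")
```

With the real data, `items` would be loaded from the JSON file written by the search script rather than from a hard-coded list.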

```python
CHANNEL_PREFIX = "https://www.youtube.com/"

def youtube_channel (handle):
    url = CHANNEL_PREFIX + handle
    ch = Channel (url)
    items = []
    for result in ch.videos:
        captions = [c.json_captions for c in result.captions]
        video_id = result.vid_info["videoDetails"]["videoId"]
        items.append ({
            "author"        : result.author,
            "captions"      : captions,
            "channel_id"    : result.channel_id,
            "description"   : result.description,
            "handle"        : handle,
            "id"            : video_id,
            "keywords"      : result.keywords,
            "length"        : result.length,
            "publish_date"  : str (result.publish_date),
            "thumbnail_url" : result.thumbnail_url,
            "title"         : result.title,
            "url"           : PREFIX + video_id,
            "views"         : result.views
        })
    return items

in_file = "youtube_accelerationism_results.json"
out_file = "youtube_accelerationism_all.json"
df = pd.read_json (in_file)
g = df.groupby ("handle")
df = pd.DataFrame ({"count" : g.size ()}).reset_index ()
items = []
for handle in df["handle"]:
    videos = youtube_channel (handle)
    items.extend (videos)
    print (handle + ": " + str (len (videos)) + " videos")
print (str (len (set (df["handle"]))) + " handles")
print (str (len (items)) + " videos")
with open (out_file, "w") as f:
    json.dump (items, f, indent=2)
```

Next, we download the full list of videos posted by each account that came up in the #accelerationism search. (Note: the results returned by the pytubefix library, at least presently, do not include livestreams or shorts.) Similar to the search, this process results in a JSON-formatted text file containing basic information about each video. The accounts that appeared in the #accelerationism search have posted a total of 1530 videos, but a casual glance indicates that at least a few of the accounts are false positives where a legitimate YouTube user just happened to include #accelerationism in the video description.

```python
TERMS = ["accelerationism", "accelerationist", "accelerator",
         "acceleration", "accelerating", "need for speed",
         "加速师", "加速主义"]

# True if the title or description contains any of the spam keywords
def test (item):
    text = (item["title"] + item["description"]).lower ()
    for term in TERMS:
        if term in text:
            return True
    return False

in_file = "youtube_accelerationism_all.json"
out_file = "youtube_accelerationism_spam.json"
videos = pd.read_json (in_file)
frames = []
for h in set (videos["handle"]):
    # copy so that adding a column does not write to a slice of videos
    df = videos[videos["handle"] == h].copy ()
    df["test"] = df.apply (test, axis=1)
    count = len (df.index)
    accel = len (df[df["test"]].index)
    ratio = accel / count
    # keep the account if at least half of its videos match
    if ratio >= 0.5:
        frames.append (df)
df = pd.concat (frames)
print (str (len (set (df["handle"]))) + " handles")
print (str (len (df.index)) + " videos")
with open (out_file, "w") as f:
    json.dump (df.to_dict (orient="records"), f, indent=2)
```

We can programmatically eliminate these false positives by checking the video titles and descriptions for a few keywords that are common in #accelerationism spam and only keeping accounts where at least half of the videos contain one or more of these keywords. (The exact set of criteria will vary from spam network to spam network and generally requires manual review and some trial and error to figure out.) Removal of false positives reduces the number of accounts from 53 to 43.
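The same "at least half of an account's videos match a keyword" rule can also be expressed without an explicit loop over handles. This is only a sketch under stated assumptions: the `keep_spam_accounts` helper, the shortened `SAMPLE_TERMS` list, and the sample rows are all hypothetical, and unlike the script above it joins title and description with a space.

```python
import pandas as pd

# shortened, illustrative keyword list (not the full list used above)
SAMPLE_TERMS = ["accelerationism", "need for speed"]

def keep_spam_accounts(videos, terms=SAMPLE_TERMS, threshold=0.5):
    """Keep videos from handles whose share of keyword matches is >= threshold."""
    text = (videos["title"] + " " + videos["description"]).str.lower()
    # 1.0 where any keyword appears in the combined text, else 0.0
    match = text.apply(lambda t: any(term in t for term in terms)).astype(float)
    # per-account share of matching videos, broadcast back to every row
    ratio = match.groupby(videos["handle"]).transform("mean")
    return videos[ratio >= threshold]

# hypothetical data: one spam-like account, one false positive
sample = pd.DataFrame({
    "handle": ["@spam", "@spam", "@spam", "@legit", "@legit", "@legit"],
    "title": ["Need for Speed clip", "#accelerationism", "misc",
              "An essay", "Cooking", "Travel"],
    "description": ["", "", "", "#accelerationism", "", ""],
})

kept = keep_spam_accounts(sample)
print(sorted(set(kept["handle"])))
```

Here `@spam` matches on two of three videos and is kept, while `@legit` matches on one of three and is dropped. The threshold and keyword list remain things to tune by manual review.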

```python
# generate table of accounts
in_file = "youtube_accelerationism_spam.json"
out_file = "youtube_spam_accounts.csv"
videos = pd.read_json (in_file)
g = videos.groupby (["handle", "author", "channel_id"])
df = pd.DataFrame ({
    "videos" : g.size (),
    "oldest" : g["publish_date"].min (),
    "newest" : g["publish_date"].max ()
}).reset_index ()
df = df[["handle", "author", "channel_id", "videos",
         "oldest", "newest"]]
df.sort_values (["oldest", "newest"]).to_csv (out_file, index=False)

# repetition analysis: for each distinct value of a column, count the
# videos using it and the number of distinct accounts that posted it
def aggregate (df, column):
    g = df.groupby (column)
    counts = pd.DataFrame ({"videos" : g.size ()})
    counts = counts.reset_index ()[[column, "videos"]]
    g = df.groupby ([column, "channel_id"])
    accounts = pd.DataFrame ({"." : g.size ()})
    accounts = accounts.reset_index ()[[column, "channel_id"]]
    g = accounts.groupby (column)
    accounts = pd.DataFrame ({"accounts" : g.size ()})
    accounts = accounts.reset_index ()[[column, "accounts"]]
    counts = counts.merge (accounts, on=column)
    return counts.sort_values (["videos", "accounts"],
                               ascending=False)

# remove the channel name and the three characters that follow it
videos["title"] = videos.apply (lambda r:
    r["title"].replace (r["author"], "")[3:], axis=1)
out_file_title = "youtube_spam_titles.csv"
out_file_desc = "youtube_spam_descriptions.csv"
aggregate (videos, "title").to_csv (out_file_title, index=False)
aggregate (videos, "description").to_csv (out_file_desc, index=False)
```

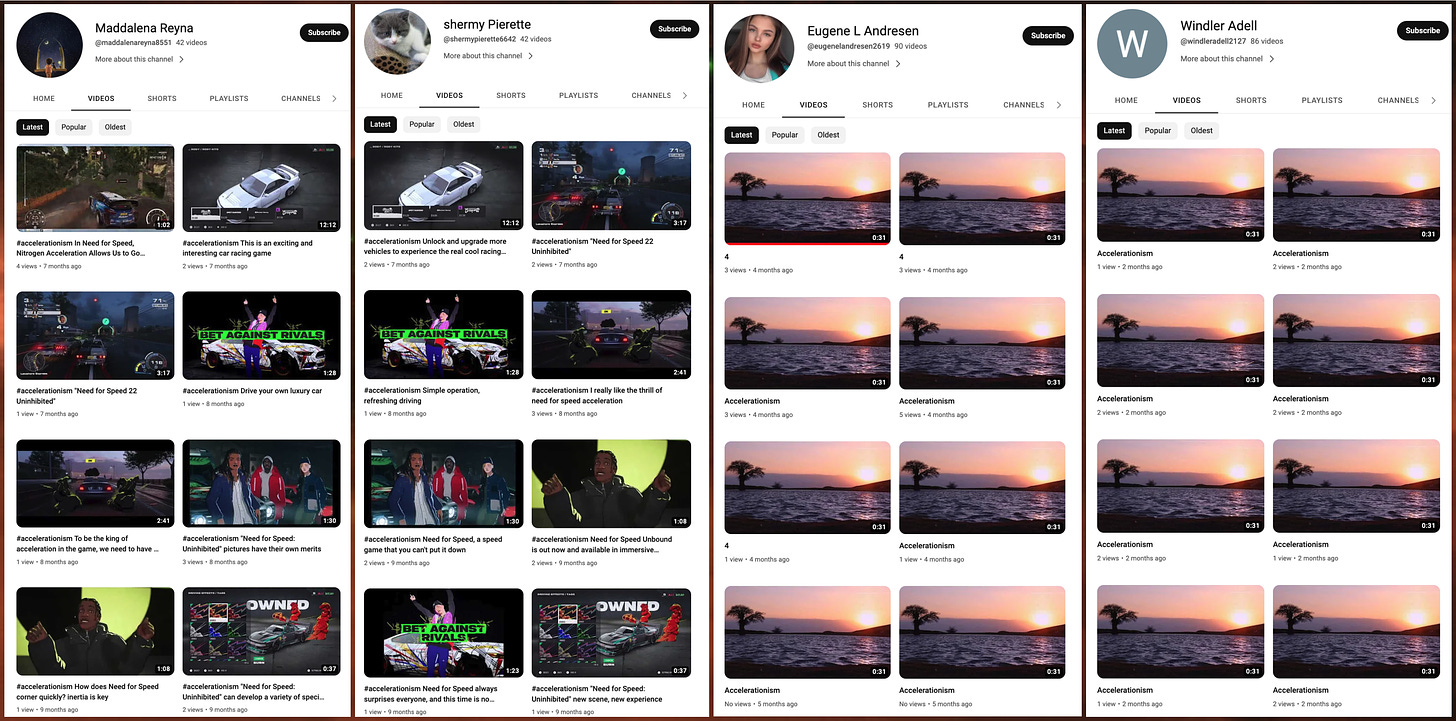

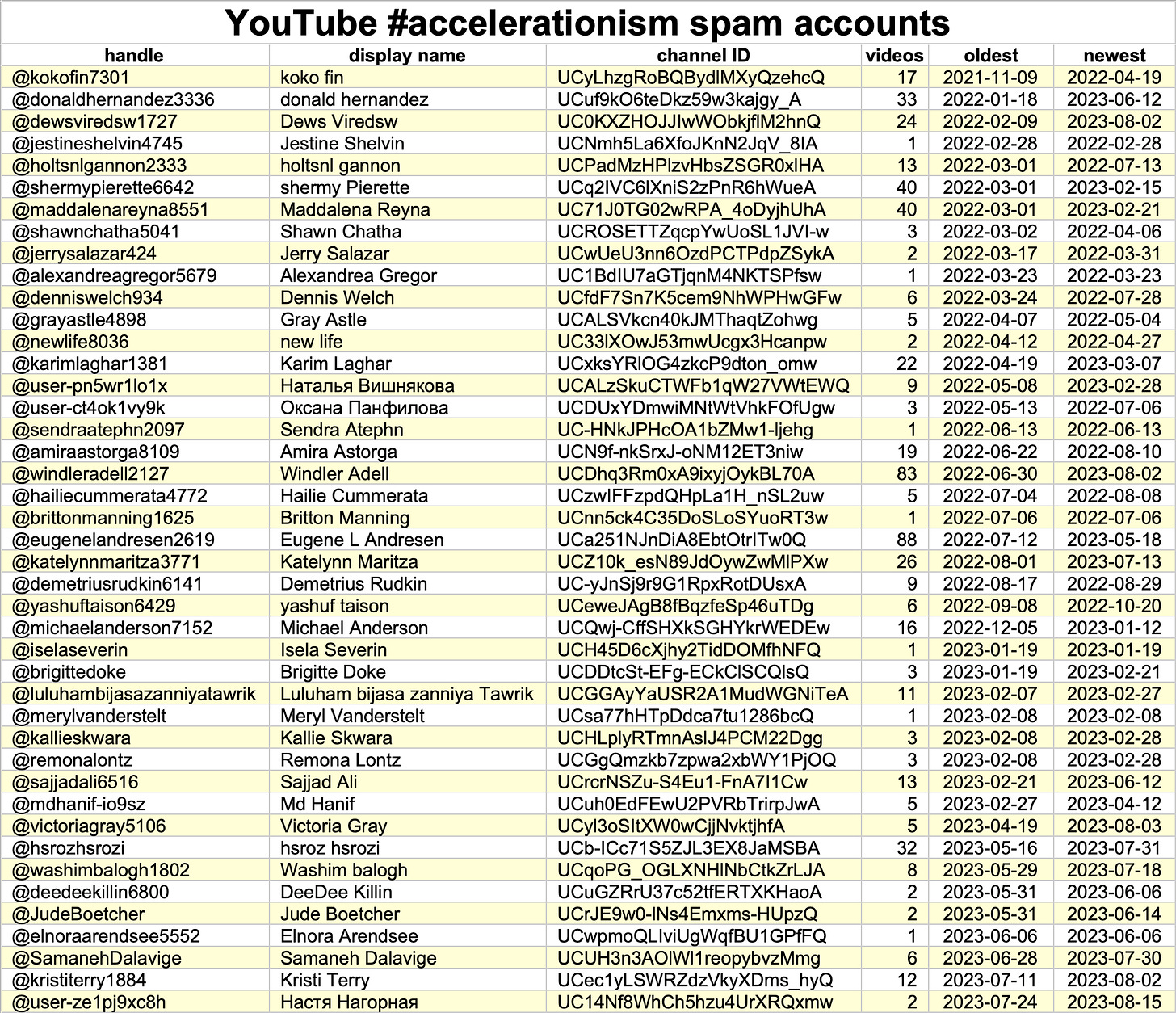

The earliest activity from this set of #accelerationism spam accounts was in November 2021. (#Accelerationism spam has been present on other platforms, such as X/Twitter, since 2020 and possibly earlier.) Almost all of these 43 spam accounts have channel names that consist of a first name and a last name, a naming convention that is somewhat rarer for YouTube channels than for personal accounts on social media platforms such as Facebook, Instagram, or X/Twitter. These accounts have posted a total of 585 videos, not including shorts.

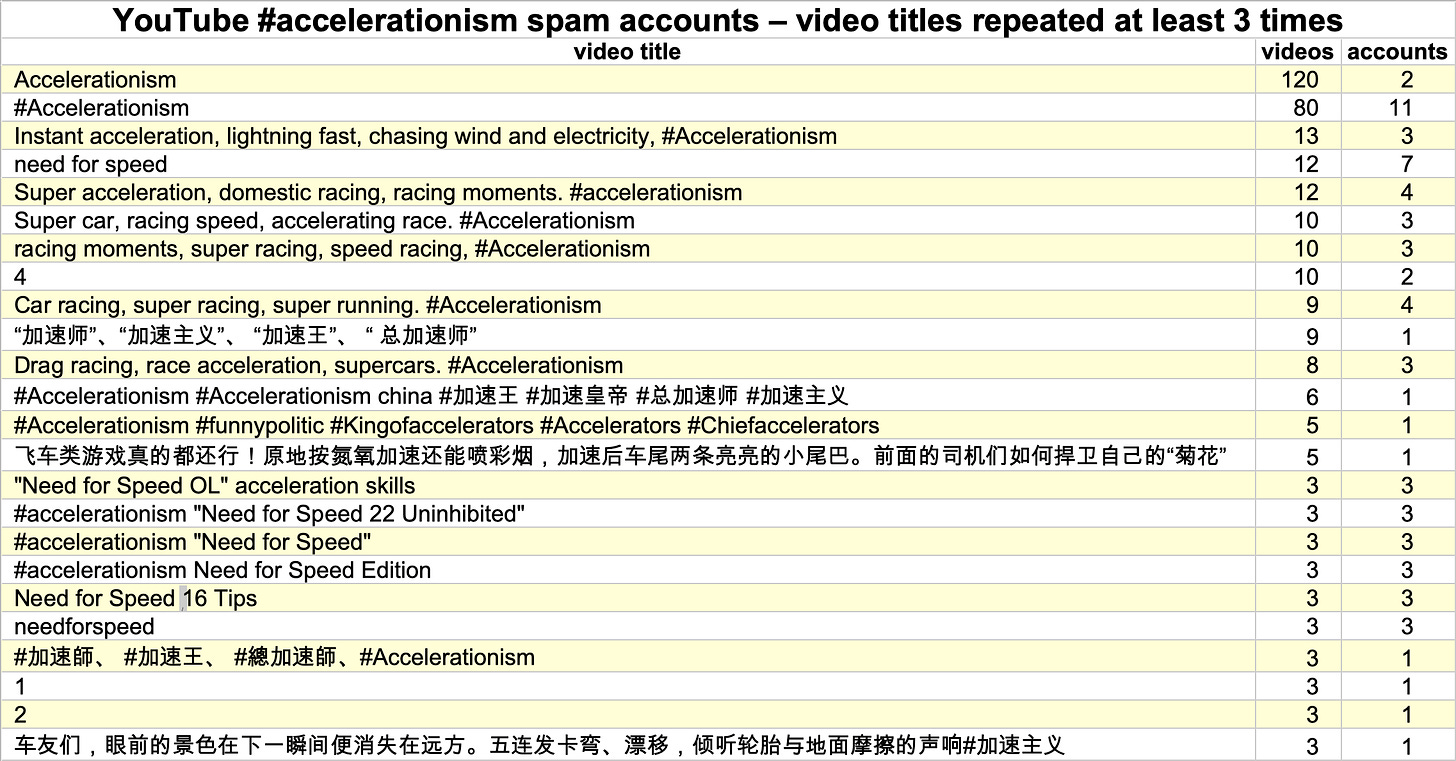

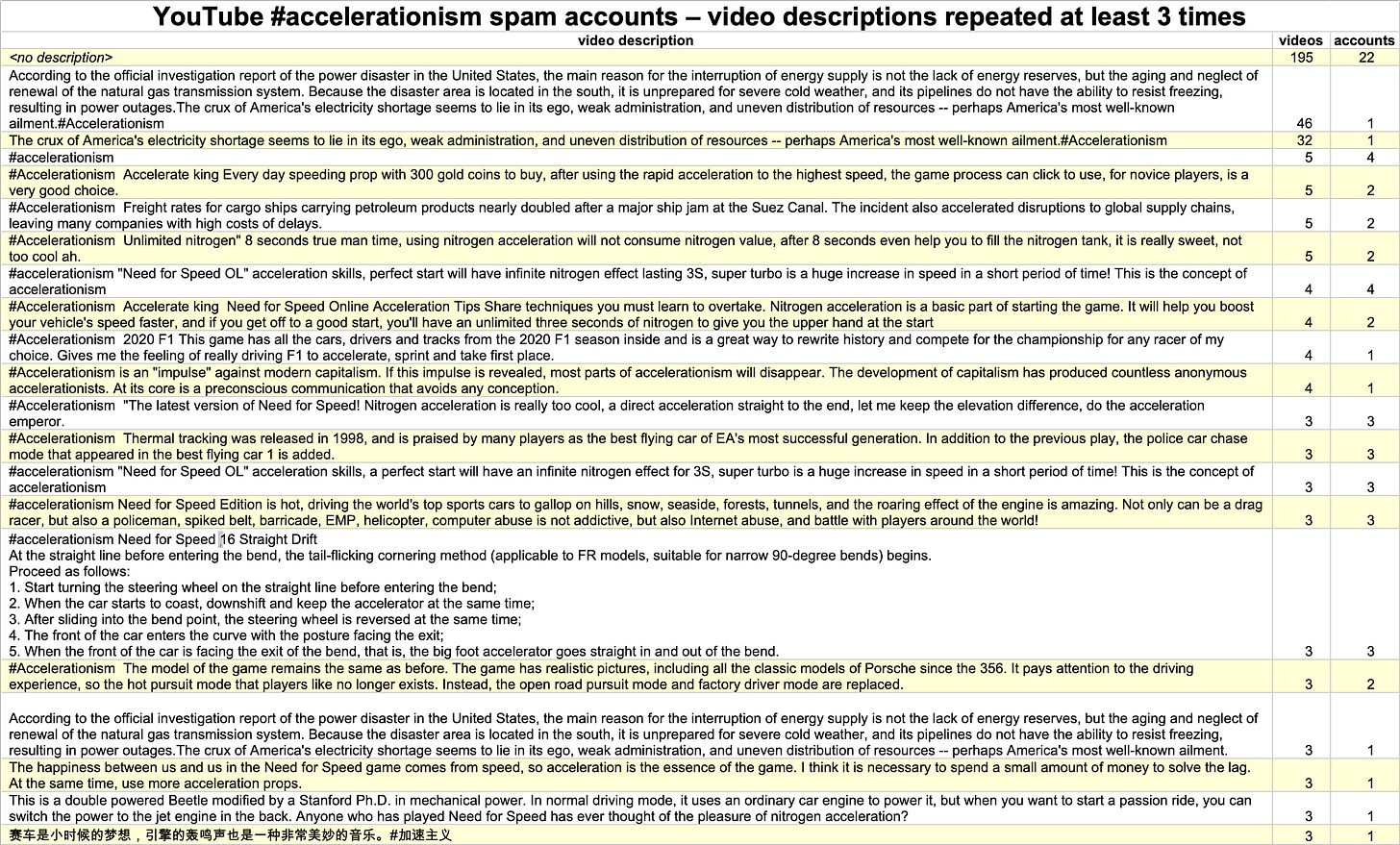

Repetition is a hallmark of spam networks on any social media platform, and these #accelerationism YouTube spam accounts are no exception. Both titles and descriptions are repeated, sometimes reused over and over by a single account and on other occasions duplicated verbatim by multiple accounts. Much of the repeated text is related to the Need for Speed video game franchise, with political material thrown in here and there. The video content is repetitive as well, as quickly becomes obvious when scrolling through the accounts’ feeds.
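To isolate the text that is duplicated verbatim across multiple accounts, rather than merely reused by one account, the counts can be filtered on the number of distinct channels. This is a minimal sketch using pandas named aggregation; the `shared_text` helper and the sample rows are hypothetical, and the column names are assumed to match the JSON fields used earlier.

```python
import pandas as pd

def shared_text(videos, column, min_accounts=2):
    """Values of `column` posted by at least `min_accounts` distinct channels."""
    out = videos.groupby(column).agg(
        videos=("channel_id", "size"),       # how many videos use this text
        accounts=("channel_id", "nunique"),  # how many distinct channels
    )
    out = out[out["accounts"] >= min_accounts]
    return out.sort_values(["accounts", "videos"], ascending=False).reset_index()

# hypothetical data: title "A" is shared by two channels, "B" is reused by one
sample = pd.DataFrame({
    "title": ["A", "A", "A", "B", "B", "C"],
    "channel_id": ["ch1", "ch2", "ch1", "ch1", "ch1", "ch3"],
})

shared = shared_text(sample, "title")
print(shared)
```

Passing `"description"` instead of `"title"` would apply the same check to video descriptions.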

import bokeh.plotting as bk

import bokeh.palettes as pal

in_file = "youtube_accelerationism_spam.json"

title = "YouTube #accelerationism spam network - videos posted per day"

videos = pd.read_json (in_file)

videos["t"] = pd.to_datetime (videos["publish_date"]).dt.date

g = videos.groupby ("t")

df = pd.DataFrame ({"count" : g.size ()}).reset_index ()

p = bk.figure (plot_width=1200, plot_height=600, title=title,

x_axis_label="Date", y_axis_label="Number of videos",

x_axis_type ="datetime",

y_range=[0, 1.08 * df["count"].max ()])

p.quad (top=df["count"], bottom=0, left=df["t"],

right=df["t"] + pd.Timedelta (days=1),

color="#df1030", alpha=0.8333)

p.xaxis[0].ticker.desired_num_ticks = 14

p.xaxis[0].formatter.months = ["%m/%Y"]

p.xaxis.axis_label_text_font_size = "12pt"

p.yaxis.axis_label_text_font_size = "12pt"

p.yaxis.major_label_text_font_size = "12pt"

p.xaxis.major_label_text_font_size = "12pt"

p.xaxis[0].ticker.desired_num_ticks = 8

p.title.text_font_size = "13pt"

p.title.align = "center"

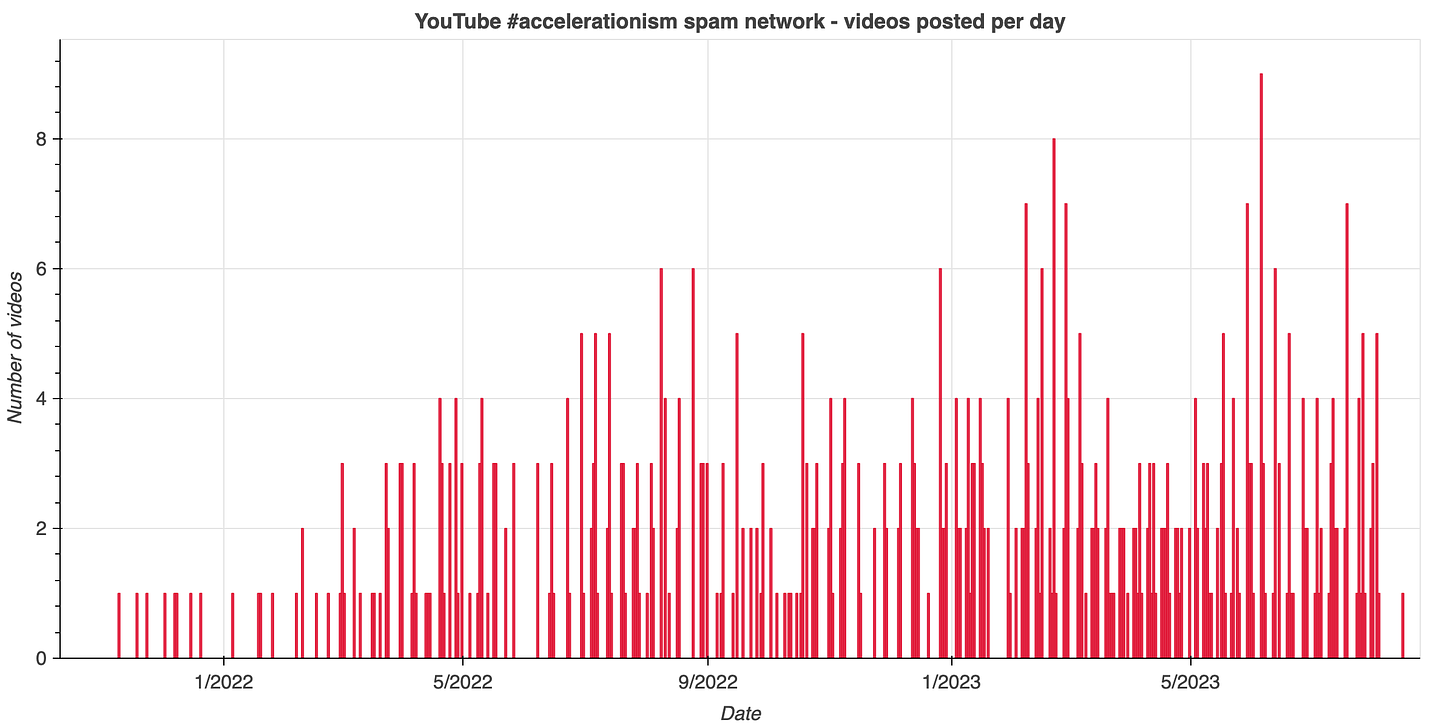

bk.show (p)Since the downloaded YouTube data includes timestamps (or at least dates — exact times don’t appear to be available), we can graph the volume of #accelerationism spam activity over time, with the caveat that YouTube may have already taken down some accounts or excluded them from search results. The network’s output is relatively low; the 585 videos posted thus far were posted over a period of almost two years, with the volume slowly growing as the number of accounts increased. The network as a whole presently appears to take a couple of days a week off, although that may simply be an artifact of the overall slow rate of posting.
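One way to probe the "days off" observation is to tally videos by day of the week and see whether particular weekdays are consistently quiet. The sketch below assumes date strings like those stored in the `publish_date` field; the `videos_per_weekday` helper and the sample dates are hypothetical.

```python
import pandas as pd

DAYS = ["Monday", "Tuesday", "Wednesday", "Thursday",
        "Friday", "Saturday", "Sunday"]

def videos_per_weekday(publish_dates):
    """Count videos per weekday, including weekdays with no videos."""
    t = pd.to_datetime(pd.Series(publish_dates))
    counts = t.dt.day_name().value_counts()
    # put the days in calendar order and fill in missing days with zero
    return counts.reindex(DAYS, fill_value=0)

# hypothetical dates: two Mondays, one Tuesday, one Saturday
sample_dates = ["2024-01-01", "2024-01-02", "2024-01-08", "2024-01-06"]

by_day = videos_per_weekday(sample_dates)
print(by_day)
```

A weekday that stays at or near zero across the full dataset would suggest a scheduled pause, whereas roughly even counts would support the reading that the gaps are just a side effect of slow posting.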

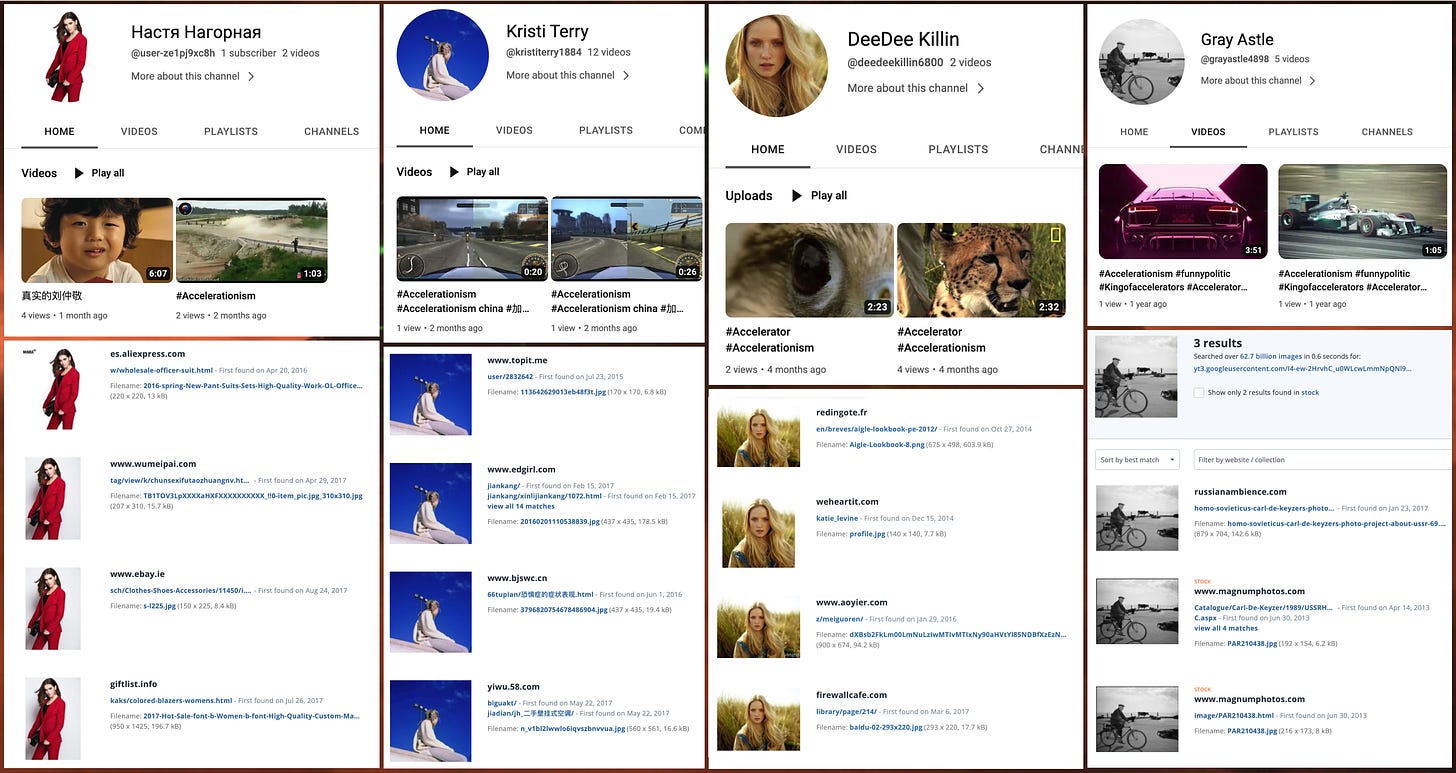

As a final note, it will come as no surprise to those of you who are familiar with spam networks that many of the YouTube #accelerationism spam accounts use plagiarized photos and stock images as avatars. (A few use YouTube’s default profile image, which is a circle containing the first letter of the channel name.) The use of stolen images is an additional data point indicating that this set of accounts is inauthentic.