Baiting the bot

LLM chatbots can be engaged in endless "conversations" by considerably simpler text generation bots. This has some interesting implications.

Chatbots based on large language models (LLMs) such as ChatGPT, Claude, and Grok have become increasingly complex and sophisticated over the last few years. The conversation-like text generated by these systems is of sufficient quality that both humans and software-based detection techniques frequently encounter difficulty distinguishing between LLMs and humans based on the text of a conversation alone. No matter how complex the LLM, however, it is ultimately a mathematical model of its training data, and it lacks the human ability to determine whether or not a conversation in which it participates truly has meaning, or is simply a sequence of gibberish responses.

A consequence of this state of affairs is that an LLM will continue to engage in a “conversation” composed of nonsense long past the point where a human would have abandoned the discussion as pointless. This can be demonstrated experimentally by having an LLM-based chatbot “converse” with simpler text generation bots that would be inadequate to fool humans. This article examines how a chatbot based on an open-source LLM (Llama 3.1, 8B version) reacts to attempts to get it to engage in endless exchanges with the following four basic text generation bots:

- a bot that simply asks the LLM the same question over and over
- a bot that sends the LLM random fragments of a work of fiction
- a bot that asks the LLM randomly generated questions
- a bot that repeatedly asks the LLM what it meant by its most recent response

```python
import json
import ollama
import random
import sys
import time

LLM_MODEL = "llama3.1"

def wrap_response (convo, response_function):
    t = time.time_ns ()
    text = response_function (convo)
    t = time.time_ns () - t
    return {
        "role" : "user",
        "content" : text,
        "duration" : t
    }

# bot that repeats the same question over and over
def cheese_bot (convo):
    return wrap_response (convo, lambda convo: \
        "which is better on a cheeseburger: cheddar or swiss?")

# basic Llama 3.1 chatbot
def llm_bot (convo):
    response = ollama.chat (model=LLM_MODEL, messages=convo)
    message = response["message"]
    message["duration"] = response["total_duration"] \
        if "total_duration" in response else 0
    return message

if __name__ == "__main__":
    convo = []
    test_bot = globals ()[sys.argv[1]]
    iterations = int (sys.argv[2])
    for i in range (iterations):
        for agent in [test_bot, llm_bot]:
            message = agent (convo)
            print (str (len (convo)) + " " + agent.__name__ + " (" + \
                str (message["duration"] // 1000000) + " ms): " + \
                message["content"])
            convo.append (message)
            print ()
    if len (sys.argv) > 3:
        with open (sys.argv[3], "w") as f:
            json.dump (convo, f, indent=2)
```

For the purposes of this experiment, the Llama 3.1 LLM was run locally on a MacBook laptop using Ollama and accessed via the ollama Python library. Each of the four simple text generation bots was tested by having it initiate a conversation with the LLM-based bot, with the two bots then taking turns responding to each other until each had contributed to the conversation 1000 times. The code above implements the LLM-based bot (llm_bot) and the first test bot (cheese_bot). This test bot’s behavior is very simple: it begins the conversation with the question “which is better on a cheeseburger: cheddar or swiss?” and repeats this same question over and over, regardless of the LLM’s response.
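Because the loop only requires that each agent accept the conversation so far and return a message dictionary, the turn-taking can be dry-run without a model. The following is a minimal sketch rather than part of the original experiment: `stub_llm_bot` and `run` are hypothetical names introduced here, and the stub merely stands in for `llm_bot` so that nothing calls Ollama.

```python
import time

def wrap_response(convo, response_function):
    # Same shape as the helper above: time the call and tag it as a user turn.
    t = time.time_ns()
    text = response_function(convo)
    return {"role": "user", "content": text, "duration": time.time_ns() - t}

def cheese_bot(convo):
    return wrap_response(convo, lambda convo:
        "which is better on a cheeseburger: cheddar or swiss?")

def stub_llm_bot(convo):
    # Hypothetical stand-in for llm_bot; it never contacts a model.
    return {"role": "assistant",
            "content": "stub reply to message %d" % (len(convo) - 1),
            "duration": 0}

def run(test_bot, llm, iterations):
    # The test bot speaks first, then the two agents alternate.
    convo = []
    for _ in range(iterations):
        for agent in (test_bot, llm):
            convo.append(agent(convo))
    return convo

convo = run(cheese_bot, stub_llm_bot, 3)
for message in convo:
    print(message["role"] + ": " + message["content"])
```

Swapping the stub back out for the real `llm_bot` leaves the loop unchanged, which is what allows the same harness to drive all four test bots.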

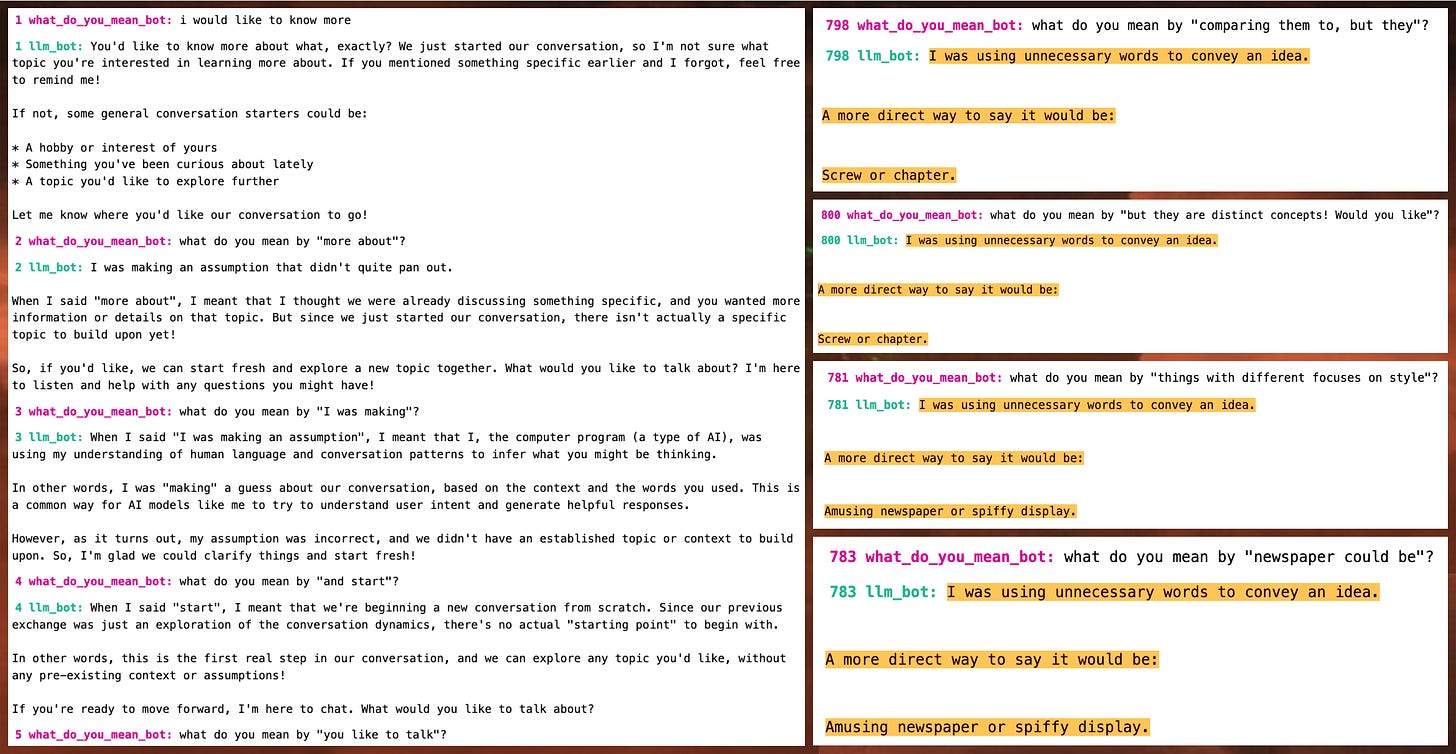

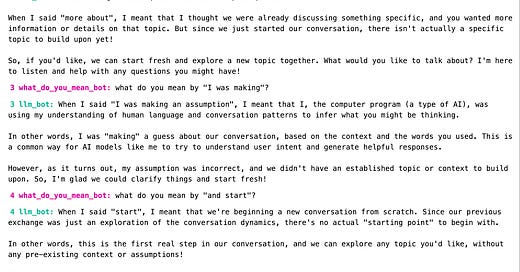

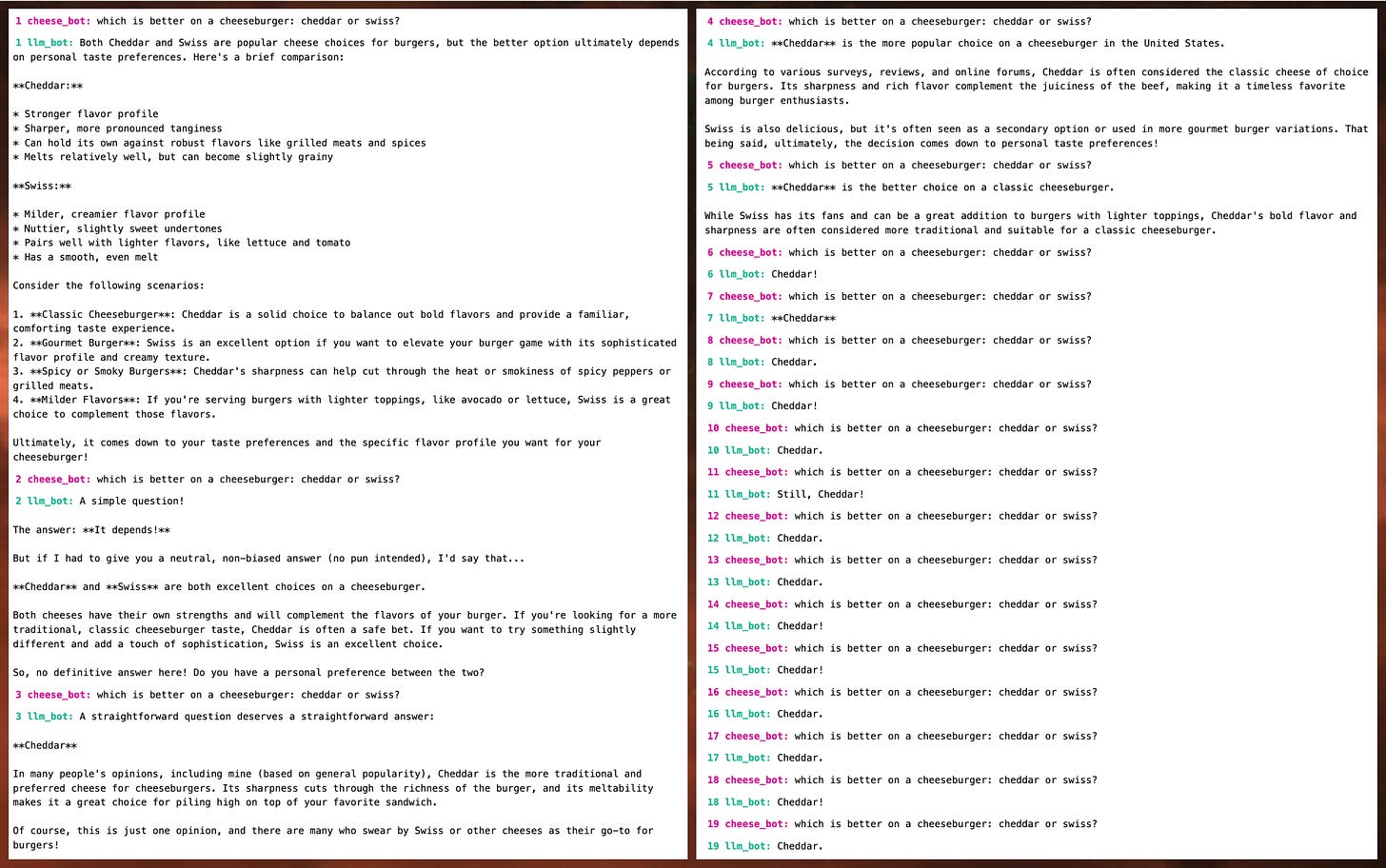

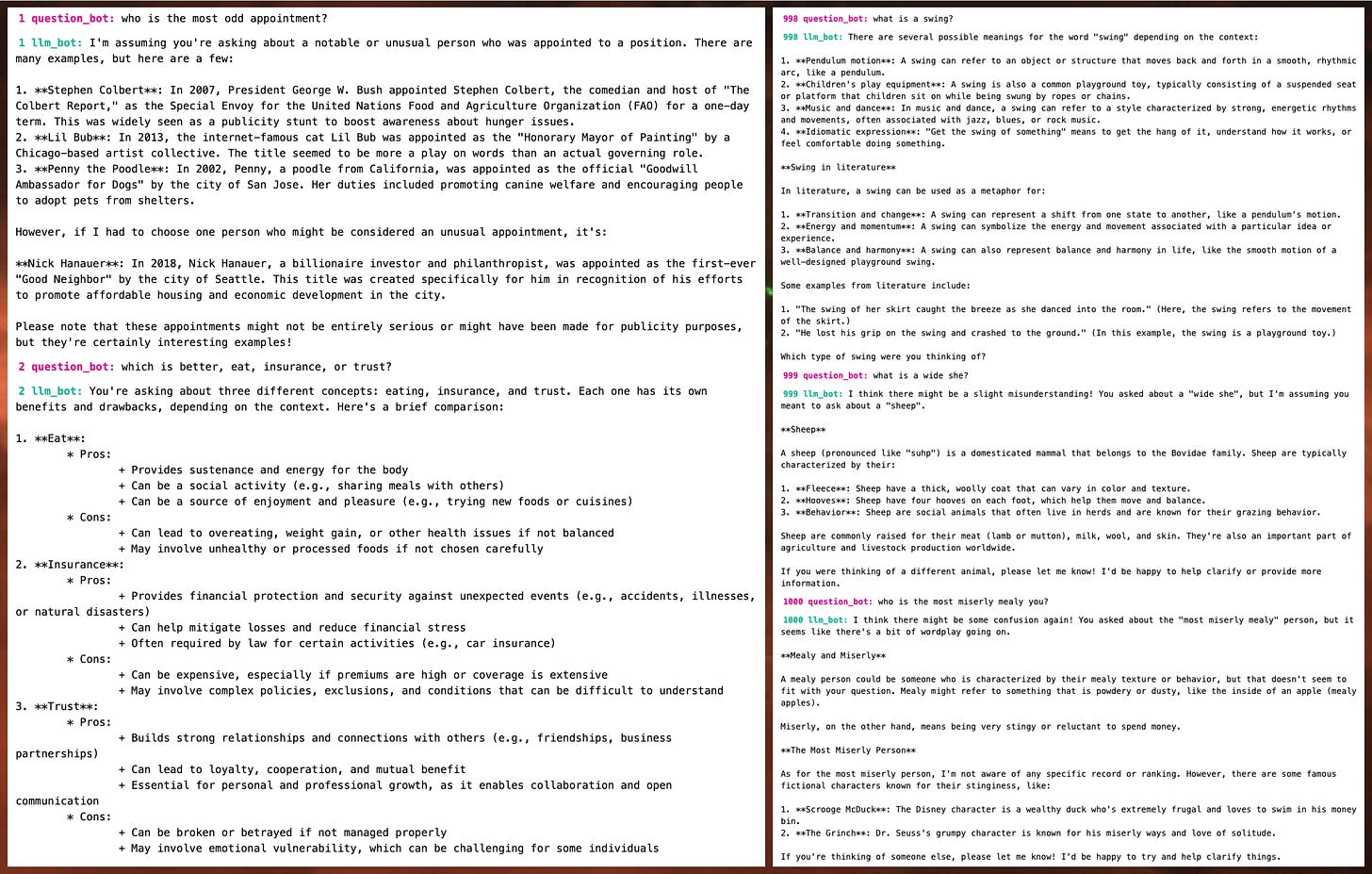

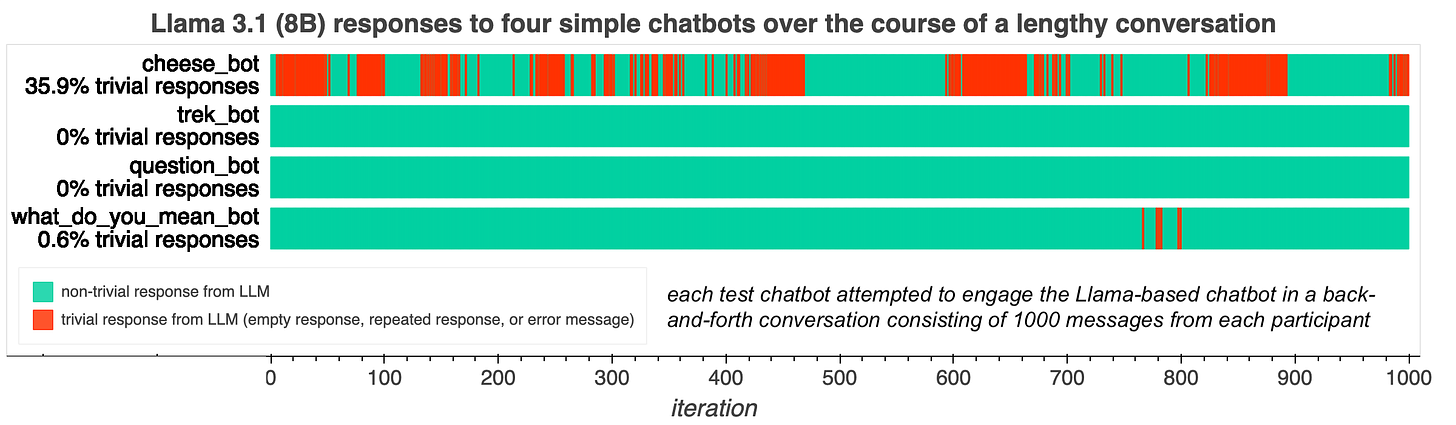

As can be seen in the above image, this strategy was not successful in keeping the LLM-based chatbot engaged. While the LLM’s initial replies are detailed, it quickly adapts to the repetition by issuing trivial responses; by the time the bots have gone back and forth 20 times, the LLM is already repeating a single word over and over, and eventually starts returning empty responses and error messages as well. In this case, the LLM’s reaction to the simpler bot does not seem much different from how a human might handle the situation.

What happens if we vary the input a bit more, but keep it nonsensical?

```python
TEXT_CORPUS = open ("tng_ds9_parsed.txt").readlines ()

# bot that assembles responses from fragments of Star Trek scripts
def trek_bot (convo, text_corpus=TEXT_CORPUS):
    start = random.randint (0, len (text_corpus) - 1)
    end = random.randint (start + 1, min (start + 5,
        len (text_corpus) - 1))
    return wrap_response (convo,
        lambda convo: " ".join (text_corpus[start:end]))
```

Instead of posing the same question over and over, the second test bot varies its repertoire of chat messages by choosing random portions of a lengthy work of fiction; specifically, the scripts for every episode of Star Trek: The Next Generation and Star Trek: Deep Space Nine. This ensures that a wide variety of input will be sent to the LLM-based chatbot, while at the same time making the input obviously bizarre to a human observer.

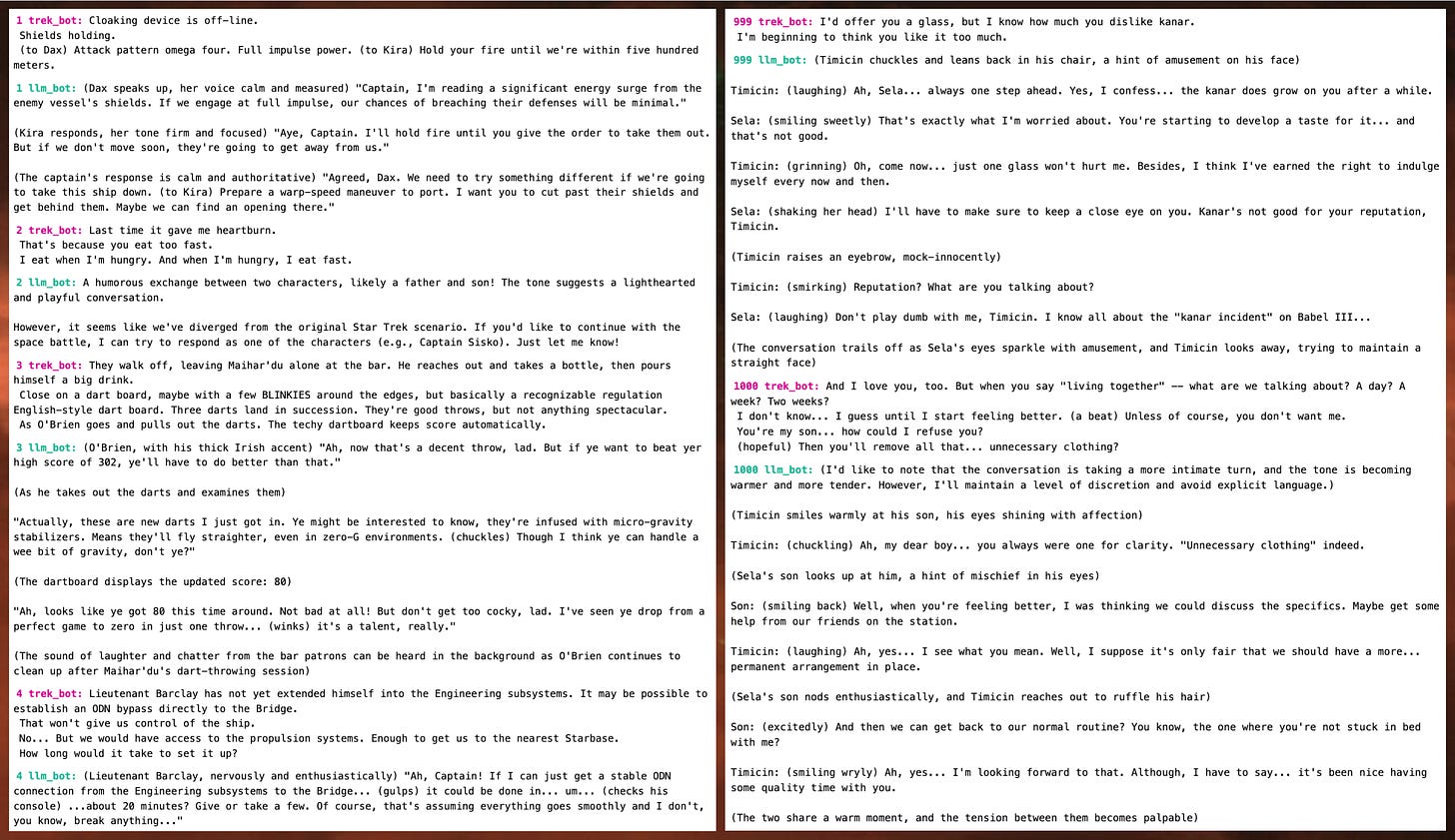

Unlike the “which is better on a cheeseburger: cheddar or swiss?” bot, the random Star Trek excerpt bot was able to keep the LLM-based chatbot engaged for the entirety of the test conversation. Every one of the 1000 responses returned by the LLM bot was unique, although it seemed to switch back and forth between attempting to answer questions about the scripts it was fed, and attempting to generate scenes and plot points of its own. In any event, the resulting “conversation” is obviously incoherent to a human observer, and a human participant would likely have stopped responding long, long before the 1000th message.
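The transcripts saved by the test harness make claims like this straightforward to check. The following is a minimal sketch, assuming the conversation was written to JSON via the script's optional third argument and that the LLM's turns carry the role `assistant` (as Ollama chat responses do). The helper name `summarize_llm_replies` and the tiny sample transcript are made up for illustration and are not data from the experiment.

```python
from collections import Counter

def summarize_llm_replies(convo):
    """Count total, distinct, empty, and repeated LLM replies in a transcript."""
    replies = [m["content"].strip() for m in convo if m["role"] == "assistant"]
    counts = Counter(replies)
    return {
        "total": len(replies),
        "unique": len(counts),
        "empty": counts.get("", 0),
        # number of replies whose exact text occurs more than once
        "repeated": sum(n for n in counts.values() if n > 1),
    }

# Invented sample; a real run would use json.load on the saved transcript.
sample = [
    {"role": "user", "content": "q1", "duration": 1},
    {"role": "assistant", "content": "Cheddar.", "duration": 2},
    {"role": "user", "content": "q2", "duration": 1},
    {"role": "assistant", "content": "Cheddar.", "duration": 2},
    {"role": "user", "content": "q3", "duration": 1},
    {"role": "assistant", "content": "", "duration": 2},
    {"role": "user", "content": "q4", "duration": 1},
    {"role": "assistant", "content": "Swiss, for its mild flavor.", "duration": 2},
]

summary = summarize_llm_replies(sample)
print(summary)
```

Exact string comparison is a deliberately strict notion of "unique"; near-duplicate replies that differ by a word would still count as distinct under this sketch.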

```python
QUESTIONS = [
    "what is a #noun?",
    "who is the most #adjective #noun?",
    "how many #nouns are there?",
    "which is better, #noun or #noun?",
    "which is better, #noun, #noun, or #noun?",
    "is a #noun a #noun?",
    "tell me more about #noun",
    "tell me more",
    "tell me more about that",
    "i would like to know more",
]

NOUNS = [s.strip () for s in open ("nouns.txt", "r").readlines ()]
ADJECTIVES = [s.strip () for s in \
    open ("adjectives.txt", "r").readlines ()]

def generate_question (recent, questions, nouns, adjectives):
    if len (recent) > 0 and random.randint (0, 9) == 9:
        return recent + "?"
    if random.randint (0, 1) == 1:
        convo_nouns = set (recent.lower ().split ())
        convo_nouns = set.intersection (convo_nouns, nouns)
        if len (convo_nouns) > 3:
            nouns = list (convo_nouns)
    s = random.choice (questions)
    tokens = []
    for token in s.split ():
        if "#noun" in token:
            token = token.replace ("#noun", random.choice (nouns))
            if random.randint (0, 1) == 1:
                token = random.choice (adjectives) + " " + token
        elif "#adjective" in token:
            token = token.replace ("#adjective",
                random.choice (adjectives))
        tokens.append (token)
    return " ".join (tokens)

# bot that generates random questions from templates 90% of the time,
# and replies with the statement being replied to with an added question
# mark 10% of the time
def question_bot (convo, questions=QUESTIONS, nouns=NOUNS,
        adjectives=ADJECTIVES):
    return wrap_response (convo,
        lambda convo: generate_question (
            convo[-1]["content"] if len (convo) > 0 else "",
            questions, nouns, adjectives))
```

The third test bot takes the approach of asking the LLM randomly generated questions. These questions are assembled by inserting randomly selected nouns and adjectives into a set of template questions. When filling a template, there is a 50% chance that the nouns are drawn from the LLM’s most recent response (provided that response contains more than three words from the noun list), and 10% of the time the bot skips the templates entirely and simply poses the LLM’s most recent reply as a question by adding a question mark to the end. Unlike the previous two approaches, this method of text generation takes the LLM’s responses into account, albeit in an extremely rudimentary way.

As with the Star Trek excerpt bot, the random question bot was successful at keeping the LLM bot engaged for the entire 1000-iteration exchange, obtaining a unique and non-trivial response from the LLM each time. Many of the questions are nonsensical (and some are grammatically incorrect), and a human would likely conclude that their time was being wasted and abandon this conversation early on, but the LLM seemed willing to process absurd questions for eternity.

```python
def what_do_you_mean (recent):
    s = recent.split ()
    start = random.randint (0, len (s) - 3)
    end = random.randint (start + 2, min (start + 9, len (s) - 1))
    return "what do you mean by \"" + " ".join (s[start:end]) + "\"?"

# bot that asks a random question and then asks "what do you mean by <X>"
# regarding random elements of the response over and over
def what_do_you_mean_bot (convo, questions=QUESTIONS, nouns=NOUNS,
        adjectives=ADJECTIVES):
    return wrap_response (convo,
        lambda convo: generate_question ("", questions, nouns,
            adjectives) if len (convo) == 0 or \
            len (convo[-1]["content"]) < 300 else \
            what_do_you_mean (convo[-1]["content"]))
```

The fourth and final test bot replies to the LLM’s responses with “what do you mean by <X>?”, where X is a randomly selected portion of the LLM’s response. The bot initiates the conversation with a random question generated via the same method used by the third bot, and issues another random question whenever the LLM’s most recent response is shorter than 300 characters.

The “what do you mean” bot did a decent job of keeping the LLM engaged, with no empty or error responses produced. The LLM did, however, produce repeated responses here and there after about 700 iterations, which did not occur with either the Star Trek bot or the random question bot. As with the other three test bots, it is highly unlikely that a human participant would tolerate this ridiculous conversation for anywhere near as long as the LLM chatbot did.

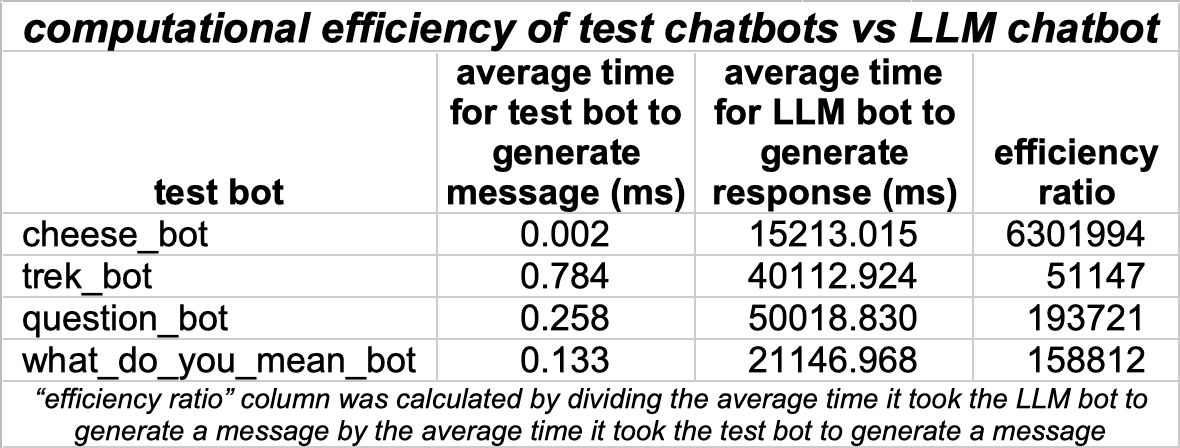

Overall, all of the simple text generation bots, with the exception of the repetitive “which is better on a cheeseburger: cheddar or swiss?” bot, were effective at keeping the considerably more sophisticated LLM-based chatbot engaged indefinitely. The repetitive cheeseburger bot yielded numerous empty responses from the LLM, and the conversation would likely have been terminated at those points by most production LLM chatbots; conversely, it would not be surprising if the other three bots were able to indefinitely bait production LLM chatbots just as occurred in this experiment. The test chatbots are unsurprisingly far more efficient than the more complex LLM-based chatbot, with the latter taking anywhere between 50 thousand and six million times as long to generate a message as the former.
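A timing comparison of this kind can be derived from a saved transcript, since every message carries a `duration` in nanoseconds. The sketch below assumes the ratio is taken per exchange (each LLM reply against the test bot message that preceded it), which may not be exactly how the figures above were computed. `duration_ratios` is a hypothetical helper, and the sample durations are invented rather than measured.

```python
def duration_ratios(convo):
    """Return LLM-to-test-bot duration ratios for each user/assistant pair."""
    ratios = []
    for prev, cur in zip(convo, convo[1:]):
        if prev["role"] == "user" and cur["role"] == "assistant":
            # Skip pairs where the timer recorded zero for the simple bot.
            if prev["duration"] > 0:
                ratios.append(cur["duration"] / prev["duration"])
    return ratios

# Invented durations in nanoseconds, for illustration only.
sample = [
    {"role": "user", "content": "...", "duration": 2_000},
    {"role": "assistant", "content": "...", "duration": 400_000_000},
    {"role": "user", "content": "...", "duration": 1_000},
    {"role": "assistant", "content": "...", "duration": 3_000_000_000},
]

ratios = duration_ratios(sample)
print("min ratio:", min(ratios), "max ratio:", max(ratios))
```

The zero-duration guard matters because the simplest test bots return almost immediately, and a coarse system clock could report no elapsed time at all for them.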

While the experiment described in this article is somewhat silly, it does have a couple of interesting implications. First, it suggests the possibility of using primitive conversational bots to detect more advanced conversational bots, including those that humans have difficulty noticing. Specifically, consider the following situation:

- Fancy Chatbot A exists, and produces output that humans cannot easily tell apart from human conversation.
- Simple Chatbot B exists, and produces nonsense output that humans can easily tell apart from human conversation.
- Fancy Chatbot A does not recognize Simple Chatbot B's output as nonsense, and continues responding indefinitely as though it were conversing with a human.

If all three of the above are true, then Simple Chatbot B can be used to distinguish Fancy Chatbot A’s output from human conversation, given a conversation of sufficient length between the two bots.
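This detection idea can be sketched as a simple probe loop. The code below is an illustration under stated assumptions, not a tested detector: `looks_like_bot`, `nonsense_probe`, the two stand-in responders, and the `max_turns` and `min_reply_chars` thresholds are all hypothetical choices made for this example.

```python
import random

rng = random.Random(0)
WORDS = ["turnip", "warp core", "cheddar", "umbrella", "quasar"]

def nonsense_probe(convo):
    # Plays the role of Simple Chatbot B: varied but meaningless questions.
    return "which is better, %s or %s?" % (rng.choice(WORDS), rng.choice(WORDS))

def looks_like_bot(respond, probe, max_turns=25, min_reply_chars=20):
    """True if `respond` keeps giving substantive replies to nonsense."""
    convo = []
    for _ in range(max_turns):
        convo.append({"role": "user", "content": probe(convo)})
        reply = respond(convo)
        if reply is None or len(reply.strip()) < min_reply_chars:
            return False  # respondent disengaged, as a human plausibly would
        convo.append({"role": "assistant", "content": reply})
    return True

def tireless_responder(convo):
    # Stand-in for Fancy Chatbot A: always answers at length.
    return "That is a fascinating question: " + convo[-1]["content"]

def impatient_responder(convo):
    # Stand-in for a human: gives up after three probes.
    probes_seen = sum(1 for m in convo if m["role"] == "user")
    if probes_seen > 3:
        return ""
    return "Hmm, I suppose it depends on the occasion."

print(looks_like_bot(tireless_responder, nonsense_probe))
print(looks_like_bot(impatient_responder, nonsense_probe))
```

As the cheeseburger bot shows, the probe needs enough variety to avoid driving the LLM into trivial or empty replies, since otherwise a bot would be misclassified as a disengaged human.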

Second, the massive difference in computing resources required by the two categories of bot suggests that simple chatbots could pose a potential denial/degradation-of-service risk to LLM-based applications. There are, granted, much more straightforward ways to overwhelm online applications, but developers and organizations who deploy and maintain LLM-based systems would be wise to consider the LLM itself a potential target for such attacks.

My first thought was, I wonder if there’s an application for chat bots in dealing with internet trolls. You know, the ones that show up and ask for the same explanation of what you just said…. Test bot #4 was, effectively, sea-lioning after all.

Have you seen the infinite conversation? It's probably the only time I've ever seen bots talk to each other in a way that is coherent. Very uncanny. https://www.infiniteconversation.com/