A simple Python scraper for infinite scroll websites

Many websites these days have basically the same user interface, which makes it easier to write general-purpose tools for harvesting publicly visible data from them

How should social media researchers go about gathering data in an era when major online platforms are removing or severely restricting the public APIs (application programming interfaces) that researchers formerly used to gather public data for analysis? The obvious alternative is to scrape content in some manner, which is generally accomplished by studying and replicating the internal API calls used by a given platform’s website and smartphone apps. While this technique can be quite effective, it suffers from the problem that each scraper of this sort must be tailored to the specific website it targets.

An alternative approach: automate a web browser using a tool such as Selenium, navigating the site as a user would, and parsing out desired text and other data from the HTML displayed in the browser. This approach allows one to take advantage of the fact that many modern sites have more or less the same user interface: a scrollable list of items that loads additional items when the user scrolls up or down (sometimes referred to as infinite scroll). By automating the process of scrolling a large number of items into view and then parsing them out, we can write a simple albeit clunky web scraper that works with many (but not all) infinite scroll websites.
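The core of this approach is a loop that keeps scrolling until the page stops growing. As a minimal sketch (not the article's code), that stopping rule can be separated from Selenium by passing in `scroll` and `get_height` callables. The names `scroll_until_stable`, `FakePage`, and `FakeClock` are hypothetical, introduced only so the logic can be run and checked without a browser.

```python
import time
from typing import Callable


def scroll_until_stable(scroll: Callable[[], None],
                        get_height: Callable[[], int],
                        max_time: float = 300,
                        tries: int = 8,
                        pause: float = 2,
                        sleep: Callable[[float], None] = time.sleep,
                        clock: Callable[[], float] = time.monotonic) -> int:
    """Call scroll() until get_height() stops growing for `tries`
    consecutive pauses (8 x 2 s = 16 s by default), or until max_time
    seconds have elapsed. Returns the last observed height."""
    start = clock()
    old_height, height = -1, 0
    while height > old_height and clock() - start <= max_time:
        old_height = height
        for _ in range(tries):
            scroll()
            sleep(pause)
            height = get_height()
            if height > old_height:
                break  # new items loaded, so start a fresh round
    return height


class FakePage:
    """Hypothetical stand-in for a browser: grows 500 px per scroll, `pages` times."""
    def __init__(self, pages: int) -> None:
        self.height, self.pages = 1000, pages

    def scroll(self) -> None:
        if self.pages > 0:
            self.pages -= 1
            self.height += 500

    def get_height(self) -> int:
        return self.height


class FakeClock:
    """Simulated time, so the example runs instantly instead of sleeping."""
    def __init__(self) -> None:
        self.t = 0.0

    def sleep(self, seconds: float) -> None:
        self.t += seconds

    def now(self) -> float:
        return self.t


page = FakePage(pages=3)
fake = FakeClock()
final = scroll_until_stable(page.scroll, page.get_height,
                            sleep=fake.sleep, clock=fake.now)
print(final, fake.t)  # 2500 22.0: 3 growing scrolls, then 8 idle ones
```

With a real browser, the two callables would wrap the same calls `get_posts` makes: `lambda: driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")` and `lambda: driver.execute_script("return document.body.scrollHeight")`. Injecting `sleep` and `clock` exists only to make the loop testable.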

```python
# SCRAPER FOR INFINITE SCROLL SITES

import json
import os
import time

import bs4
from selenium import webdriver
from selenium.webdriver import FirefoxOptions

def matches (e1, e2):
    # e1 and e2 are "tag class1 class2 ..." signatures; they match if they
    # are identical, or if they share a tag name and at least one class
    if e1 == e2:
        return True
    e1 = e1.split ()
    e2 = e2.split ()
    if e1[0] != e2[0]:
        return False
    return len (set.intersection (set (e1[1:]), set (e2[1:]))) > 0

def extract_posts (node):
    # return the longest run of consecutive sibling elements with matching
    # signatures found anywhere beneath node (searched recursively)
    prev = None
    items = []
    best = []
    chains = {}
    for e in node.find_all (recursive=False):
        cl = " ".join (e["class"]) if e.has_attr ("class") else None
        name = e.name if cl is None else \
            (e.name + " " + cl)
        if prev and not matches (name, prev):
            # the current run has ended
            if len (items) > len (best):
                best = items
            if len (items) > 5:
                chains[prev] = items
            # resume an earlier long run with this exact signature, if any
            items = chains[name] if name in chains else []
        items.append (e)
        child_items = extract_posts (e)
        if len (child_items) > len (best):
            best = child_items
        prev = name
    if len (items) > len (best):
        best = items
    return best

def extract_attr (soup, tag, attr):
    # values of attr on every <tag> element that has that attribute
    return [e[attr] for e in filter (lambda e: e.has_attr (attr),
                                     soup.find_all (tag))]

def filter_absolute (urls):
    return list (filter (lambda u: u.startswith ("http"), urls))

def get_posts (driver, url, max_time=300,
               include_raw=False, extract_fields=True,
               relative_urls=False):
    print ("downloading " + url)
    driver.get (url)
    old_height = -1
    height = 0
    start_time = time.time ()
    # scroll to the bottom until the height stops growing for 8 x 2 s
    # or max_time elapses
    while height > old_height and time.time () - start_time <= max_time:
        old_height = height
        for i in range (8):
            driver.execute_script (
                "window.scrollTo(0, document.body.scrollHeight);")
            time.sleep (2)
            height = driver.execute_script (
                "return document.body.scrollHeight")
            if height > old_height:
                break
    # then do the same, scrolling to the top (same max_time budget)
    old_height = -1
    while height > old_height and time.time () - start_time <= max_time:
        old_height = height
        for i in range (8):
            driver.execute_script ("window.scrollTo(0, 0);")
            time.sleep (2)
            height = driver.execute_script (
                "return document.body.scrollHeight")
            if height > old_height:
                break
    t = time.time ()
    print ("scroll time: " + str (int (t - start_time)) + " seconds")
    time.sleep (15)  # let the page finish loading
    start_time = t
    soup = bs4.BeautifulSoup (driver.page_source, "html.parser")
    posts = extract_posts (soup)
    if posts:
        results = []
        for post in posts:
            text = post.get_text ()
            item = {
                "text" : text
            }
            if include_raw:
                item["raw"] = str (post)
            if extract_fields:
                urls = set ()
                images = set ()
                datetimes = set ()
                # search the post's HTML and also its text re-parsed as HTML
                for s in (post, bs4.BeautifulSoup (text,
                                                   "html.parser")):
                    urls.update (extract_attr (s, "a", "href"))
                    images.update (extract_attr (s, "img", "src"))
                    datetimes.update (extract_attr (s,
                                                    "time", "datetime"))
                urls = list (urls)
                images = list (images)
                if not relative_urls:
                    urls = filter_absolute (urls)
                    images = filter_absolute (images)
                item["urls"] = urls
                item["images"] = images
                item["datetimes"] = list (datetimes)
            results.append (item)
        print ("parse time: " + str (int (time.time () - start_time))
               + " seconds")
        return results
    else:
        print ("failed to parse document")
        return None

def download (driver, urls, out_path, max_time=300,
              include_raw=False, extract_fields=True,
              relative_urls=False):
    if not out_path.endswith ("/"):
        out_path = out_path + "/"
    os.makedirs (out_path, exist_ok=True)  # create the output folder if needed
    for url in urls:
        results = get_posts (driver, url, max_time=max_time,
                             include_raw=include_raw,
                             extract_fields=extract_fields,
                             relative_urls=relative_urls)
        # file name = URL without scheme, with "/" -> "_" and "?" -> "-"
        url = url[url.find ("//") + 2:]
        url = url.replace ("/", "_").replace ("?", "-")
        fname = out_path + url + ".json"
        with open (fname, "w") as f:
            json.dump (results, f, indent=2)
        # get_posts returns None when no list of posts was found
        print (str (len (results) if results else 0)
               + " results written to " + fname)
```

The Python code above implements a relatively simple algorithm for scraping infinite scroll websites:

1. Open a given URL in a browser and repeatedly scroll to the bottom until at least 16 seconds pass without the height of the document increasing, or until a specified maximum duration has elapsed.

2. Repeatedly scroll to the top until at least 16 seconds pass without the height of the document increasing, or until the same maximum duration (shared with step 1) has elapsed.

3. Wait 15 seconds for the page to finish loading.

4. Recursively explore the DOM (document object model) and find the longest run of consecutive sibling elements with the same tag name and either identical classes (including no class attribute at all) or at least one class in common.

5. Extract the text, any links (`href` attribute of `<a>` tags), any image URLs (`src` attribute of `<img>` tags), and datetimes (`datetime` attribute of `<time>` tags) and store them in a text file in JSON format.
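Step 4 is the least obvious part of the code. The following is a simplified, browser-free sketch of the same heuristic, run on a hypothetical `Node` tree instead of Beautiful Soup tags. Unlike `extract_posts`, it omits the `chains` bookkeeping that lets a long run resume after an interruption; the names `Node`, `signature`, and `longest_run` are illustrative only.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    tag: str
    classes: List[str] = field(default_factory=list)
    children: List["Node"] = field(default_factory=list)
    text: str = ""


def signature(n: Node) -> str:
    # "tag class1 class2 ...", or just "tag" when there is no class
    return " ".join([n.tag] + n.classes)


def matches(a: str, b: str) -> bool:
    # Identical signatures, or the same tag with at least one shared class.
    if a == b:
        return True
    a_parts, b_parts = a.split(), b.split()
    return a_parts[0] == b_parts[0] and bool(set(a_parts[1:]) & set(b_parts[1:]))


def longest_run(node: Node) -> List[Node]:
    # Longest run of consecutive matching siblings anywhere under node.
    best: List[Node] = []
    run: List[Node] = []
    prev: Optional[str] = None
    for child in node.children:
        name = signature(child)
        if prev is not None and not matches(name, prev):
            if len(run) > len(best):
                best = run
            run = []
        run.append(child)
        deeper = longest_run(child)
        if len(deeper) > len(best):
            best = deeper
        prev = name
    return run if len(run) > len(best) else best


page = Node("body", children=[
    Node("nav", children=[Node("a", text=c) for c in ("Home", "About")]),
    Node("main", children=[
        Node("div", ["post", "pinned"], text="pinned post"),
        *[Node("div", ["post"], text=f"post {i}") for i in range(4)],
        Node("footer"),
    ]),
])
posts = longest_run(page)
print([p.text for p in posts])
# ['pinned post', 'post 0', 'post 1', 'post 2', 'post 3']
```

The pinned post shares the `post` class with its siblings, so it joins the run, while the `<footer>` ends it; the two-item navigation list loses because it is shorter.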

```python
# uses webdriver, FirefoxOptions, and download() from the code above
import warnings

warnings.filterwarnings ("ignore")

# run Firefox using Tor as proxy (9150 is Tor Browser's default SOCKS port)
options = FirefoxOptions ()
options.set_capability ("proxy", {
    "proxyType": "manual",
    "socksProxy": "127.0.0.1:9150",
    "socksVersion": 5
})
driver = webdriver.Firefox (options=options)

test_urls = [
    "https://duckduckgo.com/?q=toads&kav=1&ia=web",
    "https://t.me/s/DDGeopolitics",
    "https://patriots.win/new",
    "https://gab.com/a",
    "https://newsie.social/@conspirator0",
]

download (driver, test_urls, "scrape_output/")
driver.quit ()
```
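Each URL's results go to a separate JSON file whose name `download` derives from the URL. The sketch below isolates that naming rule in a hypothetical helper, `output_name`, so it can be checked without running a browser; it mirrors the string operations in `download` and is not a separate part of the article's code.

```python
def output_name(url: str, out_path: str = "scrape_output/") -> str:
    # Hypothetical helper mirroring download(): drop everything up to and
    # including "//", then map "/" -> "_" and "?" -> "-".
    if not out_path.endswith("/"):
        out_path += "/"
    stem = url[url.find("//") + 2:]
    stem = stem.replace("/", "_").replace("?", "-")
    return out_path + stem + ".json"


for url in ["https://duckduckgo.com/?q=toads&kav=1&ia=web",
            "https://t.me/s/DDGeopolitics",
            "https://newsie.social/@conspirator0"]:
    print(output_name(url))
# scrape_output/duckduckgo.com_-q=toads&kav=1&ia=web.json
# scrape_output/t.me_s_DDGeopolitics.json
# scrape_output/newsie.social_@conspirator0.json
```

One consequence of this rule: `url.find("//")` returns -1 for a URL without a scheme, so the slice starts at index 1 and silently drops the first character (`example.com/feed` becomes `xample.com_feed.json`). URLs passed to `download` should therefore include `https://`.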

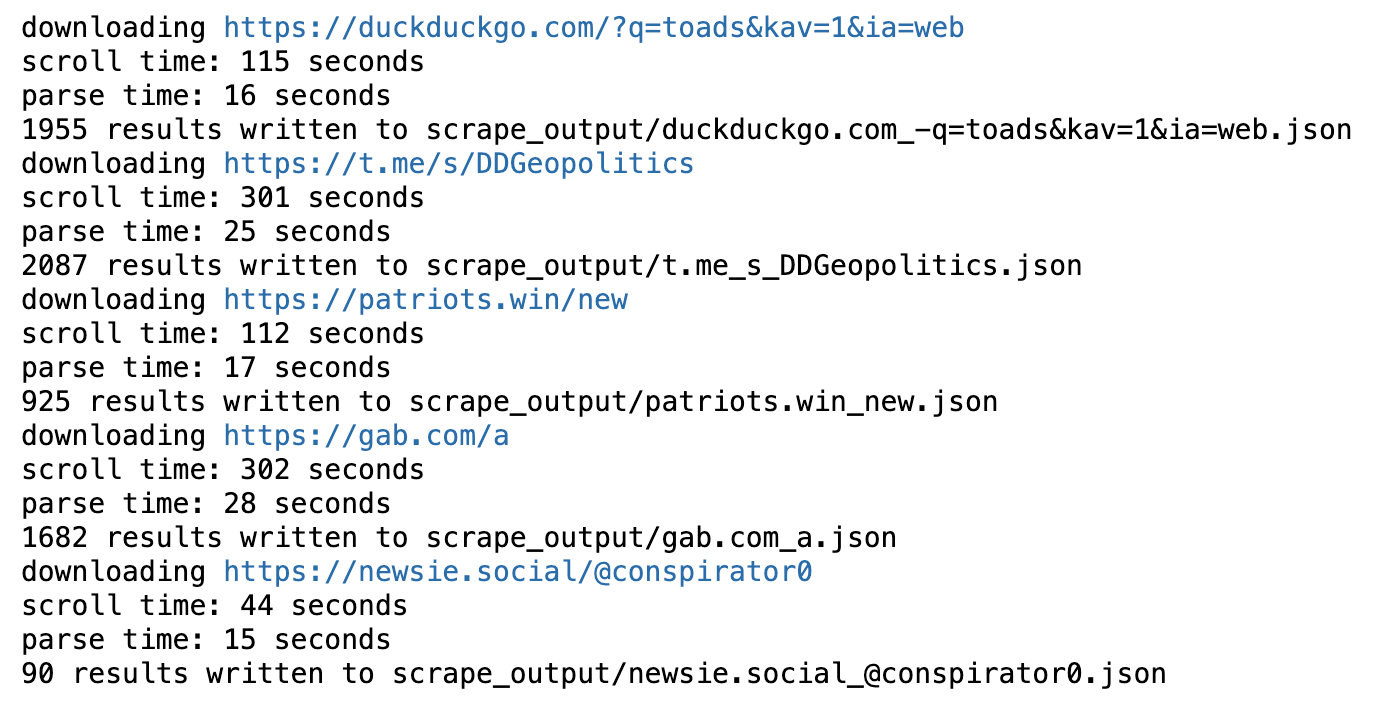

The code snippet and image above show the process of scraping five different infinite scroll feeds for up to five minutes each: DuckDuckGo search results for the word “toads”, pro-Russia Telegram channel “DD Geopolitics” (founded by Sarah Bils aka “Donbass Devushka”), pro-Trump forum patriots.win (the successor to the /r/The_Donald subreddit), Gab founder Andrew Torba’s Gab account (this scrape failed occasionally), and my own Mastodon account. This yielded 1955 DuckDuckGo search results, 2087 Telegram posts, 925 patriots.win threads, 1682 Gab posts, and 90 Mastodon posts. The scrolling phase for both the “DD Geopolitics” Telegram channel and Torba’s Gab account hit the five-minute limit, so it’s possible more posts could be gathered from both sources by raising that limit (the `max_time` parameter).

This scraper works on a variety of sites, but there’s plenty of room for improvement. The algorithm for determining which portion of the document is the list of posts is primitive, and will sometimes incorrectly identify something like a list of countries as the list of items to scrape, particularly when harvesting small numbers of posts. The set of fields extracted is relatively minimal and relies on web developers using the relevant elements (`<a>`, `<img>`, and `<time>`) as intended; adding more sophisticated parsing to detect additional fields such as usernames would be an obvious enhancement. The code as presented in this article also doesn’t work with sites that require one-time user interaction prior to scrolling, such as logging in or completing a CAPTCHA; such behaviors, if desired, are left to the reader to implement.

Library versions used: selenium 4.15.2, bs4 (Beautiful Soup) 4.11.1