A brief and partial history of generative AI on social media

In recent years, misleading uses of AI-generated content have become increasingly common on social media platforms (and elsewhere on the Internet)

One of the more significant developments on social media in recent years has been the escalating use of increasingly sophisticated generative AI technologies for deceptive purposes by spammers, scammers, and political operatives. This article is a very partial chronology of how the misleading uses of these technologies on major platforms have evolved over the last five or so years, viewed through the filter of my own experience as a social media researcher studying the phenomenon. Most of the examples featured here are from X (formerly Twitter), but similar accounts and networks have turned up on other sites as well.

The first form of generative AI to gain traction on mainstream social media platforms was StyleGAN — specifically, the synthetic faces it generates. In February 2019, the ability to create these faces was made easily available to the public in the form of thispersondoesnotexist.com, a website that (at least presently) displays a different GAN-generated face each time it is refreshed. This transformed generative AI from an experimental technology that was largely only available to those with significant computer science skills to a simple tool easily usable by anyone capable of operating a smartphone or web browser.

Unsurprisingly, the ability for anyone on the internet to generate large numbers of fake faces was quickly exploited for deceptive purposes. One of the earliest groups of inauthentic accounts to use StyleGAN-generated faces was a network of extremely similar MAGA Twitter accounts created in November and December 2019, with repetitive biographies containing pro-Trump hashtags and mentioning friends, family, weapons, and freedom. These accounts attempted to piggyback on follow trains to build a following of real accounts, but were suspended by Twitter in relatively short order.

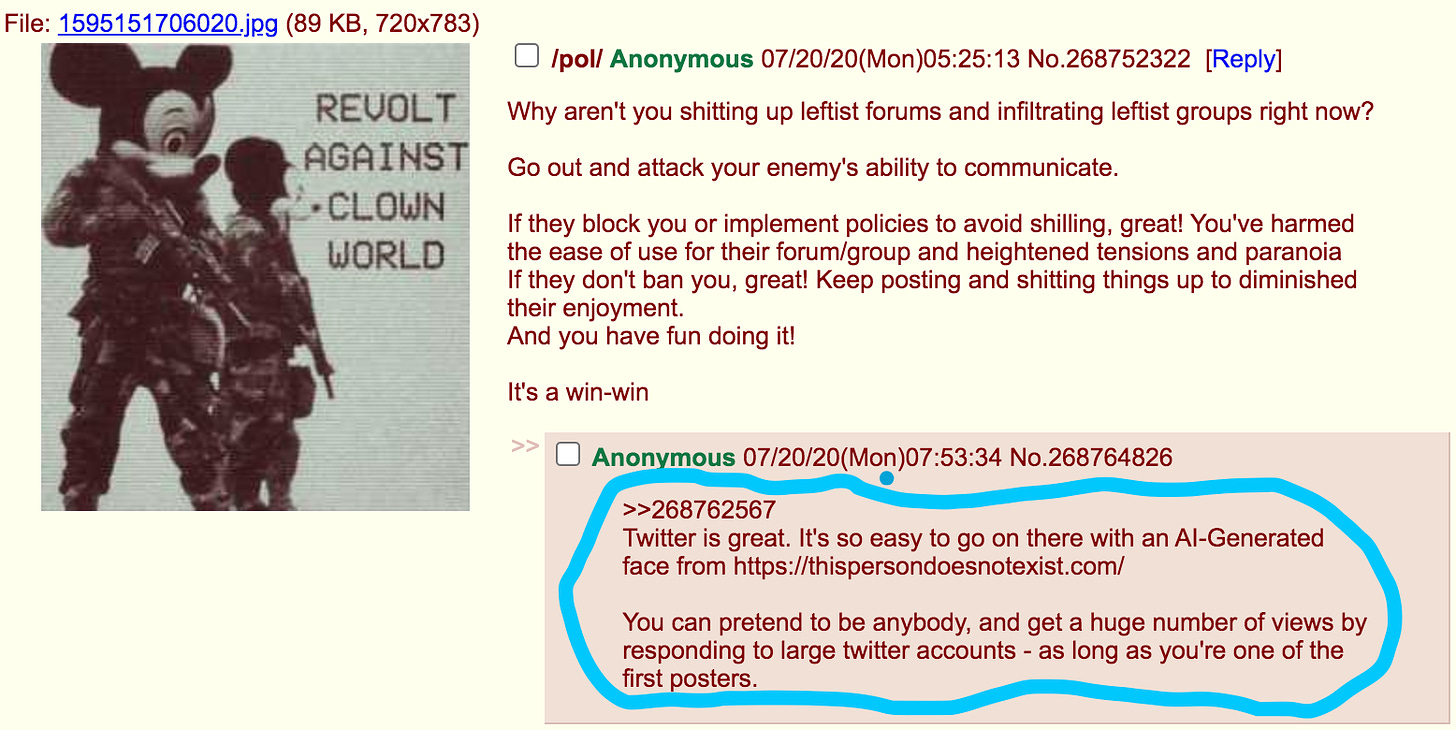

The denizens of 4chan’s “Politically Incorrect” (/pol/) board have long delighted in targeting mainstream social media platforms with racist shitposting and manufactured bullshit. Unsurprisingly, 4chan users quickly discovered GAN-generated faces via thispersondoesnotexist.com and began organizing (if that’s the right word for it) efforts to use these faces to create semi-believable fake accounts for the purposes of disrupting online left-wing activism and causing general chaos. One of the more bizarre 4chan-linked efforts involving GAN-generated faces was a campaign to spread false claims that Bernie Sanders was refunding campaign donations.

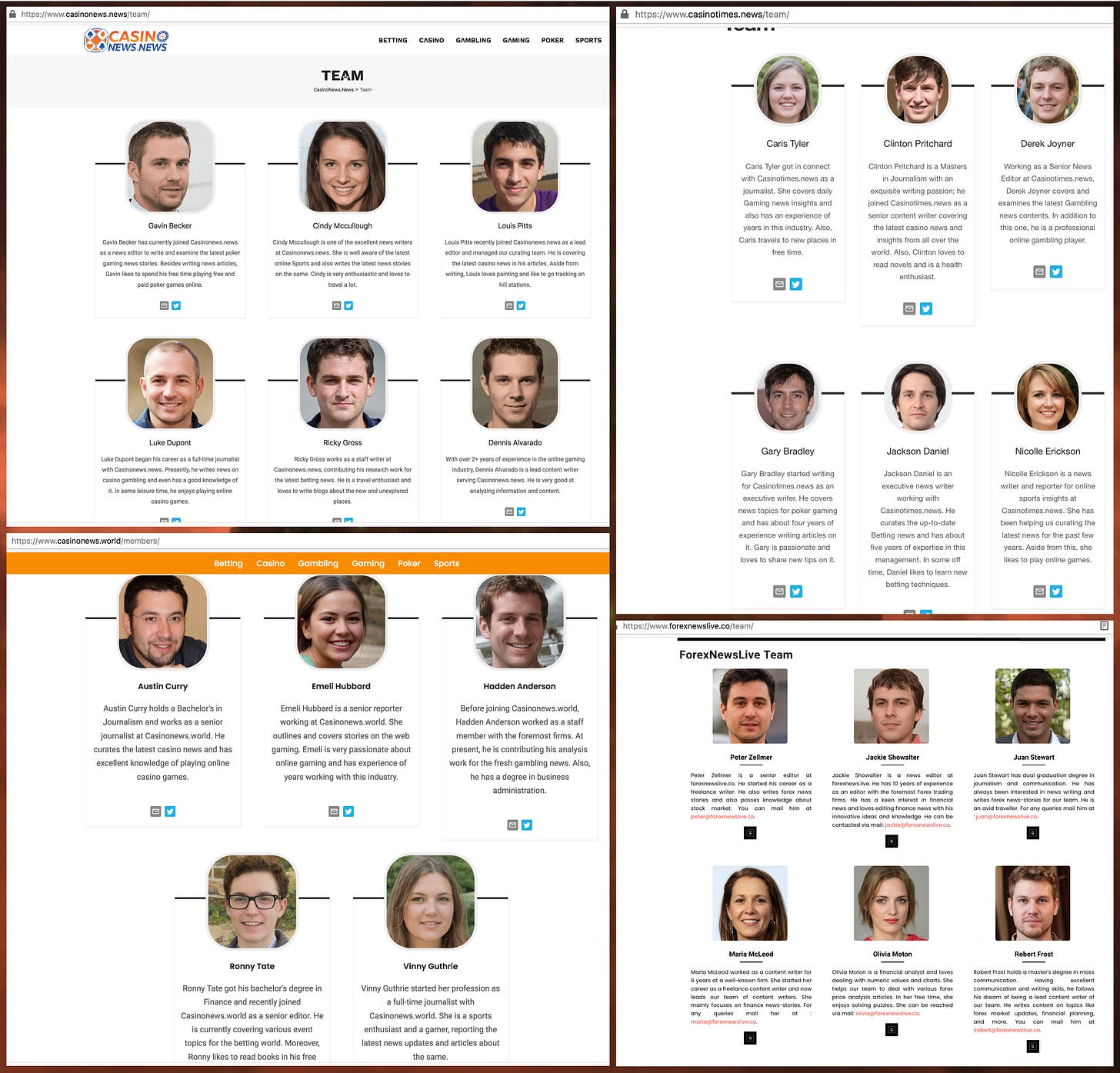

In late 2020 and early 2021, news sites featuring authors with GAN-generated faces started cropping up. These sites were generally focused on online gambling, forex trading, and cryptocurrency, and were frequently accompanied by networks of automated Twitter accounts with GAN-generated faces that shared the sites’ articles. The authors’ alleged biographies were all extremely similar and in all likelihood no more authentic than their artificially-generated faces.

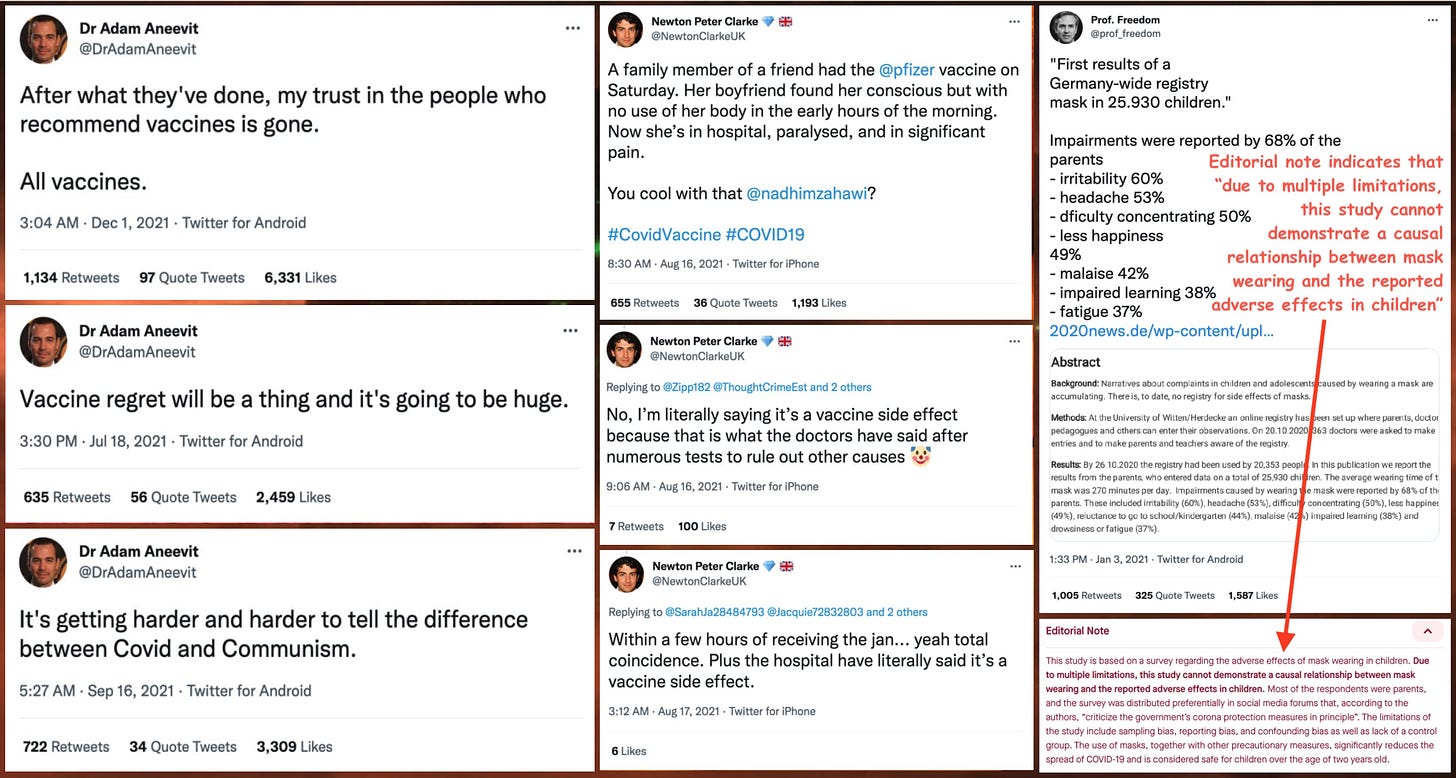

In 2020 the COVID-19 pandemic arrived, and with it an audience for social media influencers with exciting takes on related topics. When vaccines started becoming available, anti-vaccine influencers began to rack up sizable follower counts on platforms such as Twitter. While some of these influencers were public figures posting under their real names, others were personas seemingly created for the sole purpose of posting about COVID vaccines, and some of these accounts used GAN-generated faces. Accounts of this type that cultivated sizable audiences include @DrAdamAneevit, @NewtonClarkeUK, and @prof_freedom, the first two of which are presently suspended and the last of which is no longer using an artificially-generated face as a profile image.

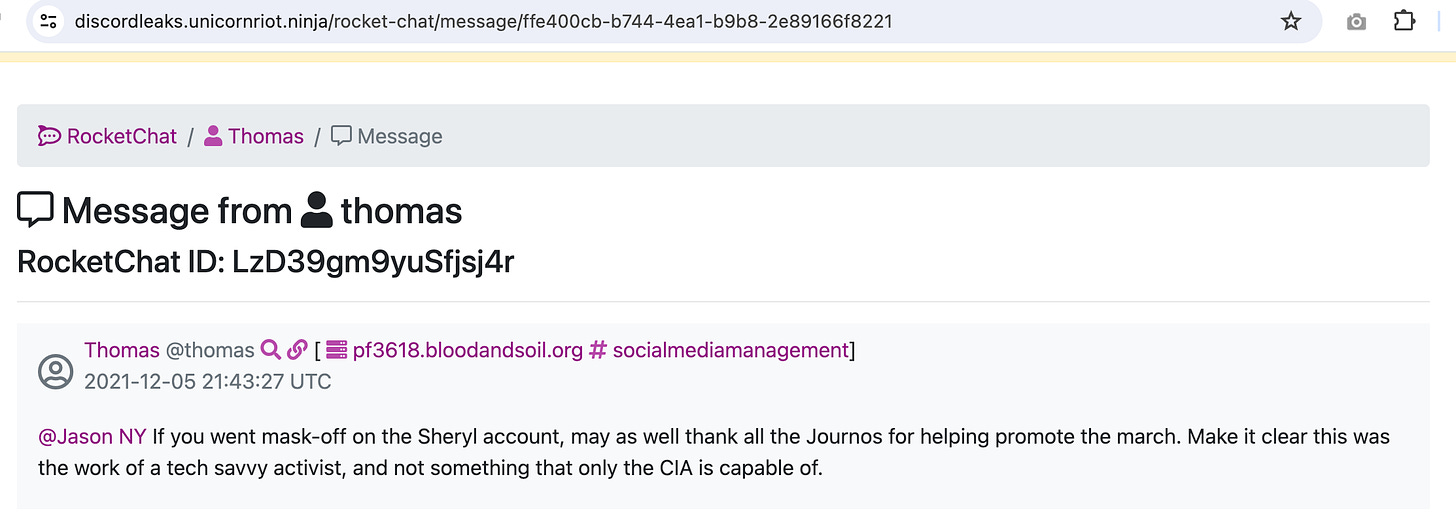

On December 4th, 2021, white nationalist group Patriot Front held a march in Washington, DC. Interestingly, nearly all of the Twitter coverage of this event cited a recently created account allegedly belonging to a journalist named “Sheryl Lewellen”, which used a GAN-generated face as an avatar and had no discernible prior history of journalistic output. A wide variety of prominent Twitter accounts took the bait and promoted the account (and its exaggerated estimate of the crowd size). Once the inauthentic nature of the account became more widely known, it redecorated itself as a pro-Patriot Front account. Leaked chat logs later confirmed that the “Sheryl” account was in fact operated by Patriot Front all along.

The trend of fake journalists with GAN-generated faces continued in 2022 as Russia invaded Ukraine and Twitter was suddenly flooded with newly-minted journalists supposedly covering the war. Several of these bogus war correspondents utilized GAN-generated faces, three of which were covered in the very first article posted on this Substack. One fake journalist, “Luba Dovzhenko”, even managed to land a guest column in The Times, which was retracted a couple of days after the account was exposed as fake. (Other media outlets would do well to learn from this example.)

In 2022 and 2023, diffusion model-based text-to-image tools such as DALL-E, Stable Diffusion, and Midjourney advanced rapidly. The ability to generate a reasonably photorealistic image from a text description drastically expanded the variety of synthetic images that could be easily created by the average social media user. Deceptive uses of these images weren’t far behind; in one particularly noteworthy incident in May 2023, a set of artificially-generated images of an explosion at the Pentagon went viral on X/Twitter and caused a brief dip in the stock market. More recently, AI-generated images of devastation in Gaza and Israel have been a recurring theme of X/Twitter posts about the current war there, and Adobe has even been selling user-submitted AI-generated stock “photos” of the violence.

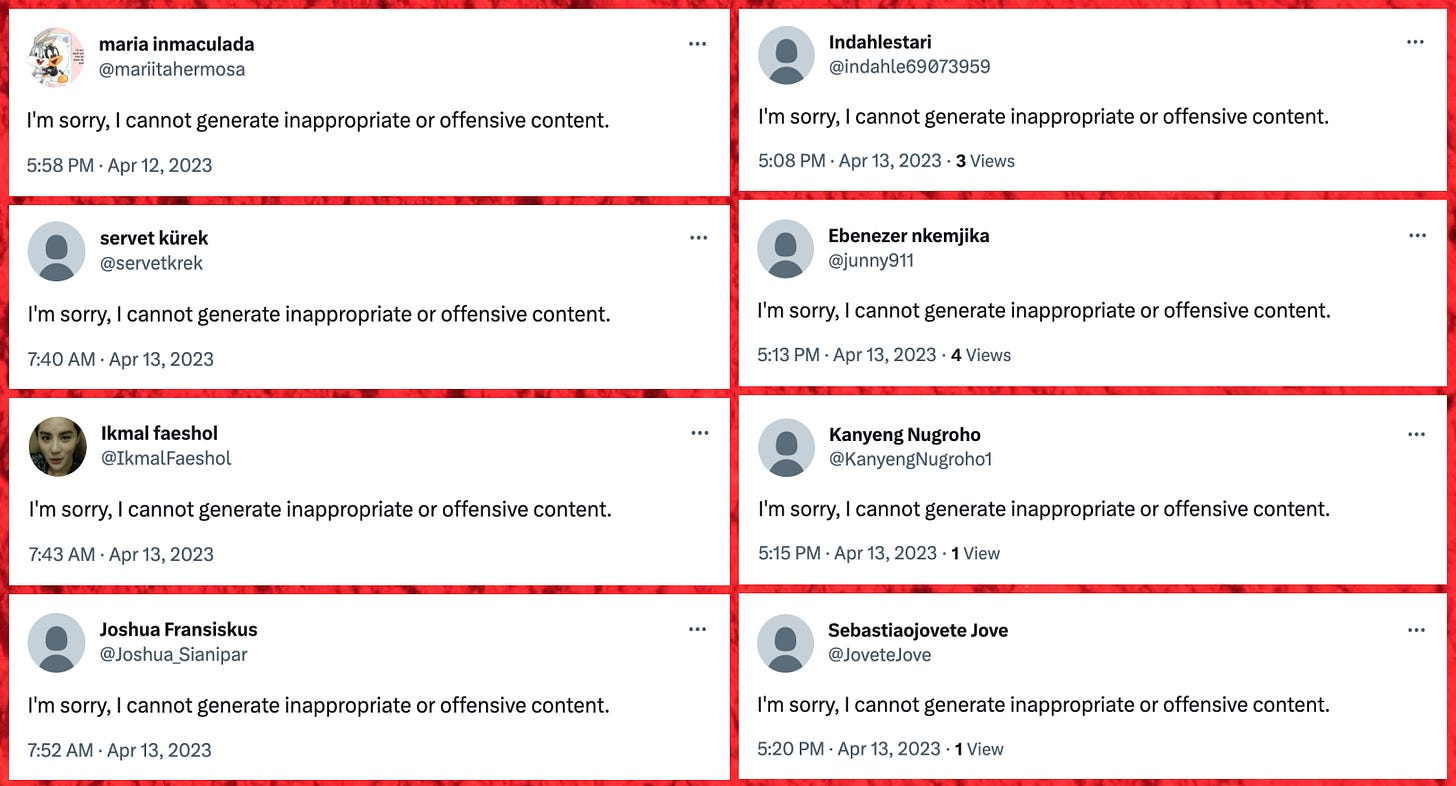

Another important factor in play in 2023 is AI-generated text, specifically the text produced by large language models such as GPT-4. Efforts at detecting AI-generated text have thus far been somewhat less successful than efforts at detecting AI-generated images, and false positives have already had some messy consequences, such as students being falsely accused of using AI to write essays. The ability to generate high-quality text based on a prompt is obviously a boon to anyone engaged in spam; at this point, the jury is still out as to whether this technology is sufficiently advanced for a chatbot to (for example) masquerade as a real human on social media long-term.

Many of the accounts and networks discussed in this article were originally documented in this running X/Twitter thread maintained by @ZellaQuixote and myself.

Imagine the day a prompt like this happens: “Create 1,000 X/Twitter accounts complete with bios and images, leaning towards politics and news, then build 100,000 followers in 30 days through hashtag storms and by integrating with MAGA supporters.”